Concurrency with csharp

Notes from the course of https://www.educative.io/courses/c-sharp-concurrency-for-senior-engineering-interviews

Getting in context

A basic example of using threads is the following:

Let’s say that we want to sum the numbers from 1 to Int32.MaxValue. If you only use one thread, you will have a slower result instead of generating two threads. Let’s see an example

1 |

|

The runMultiThreadTest method will run almost half the time of the runSingleThreadTest method. Usually, this result will be similar if CPUs support multiple threads. This task is often used with tasks such as Network or Disk IO.

Problems with Threads

- It’s usually very hard to find bugs.

- Higher cost of code maintenance

- Increase utilization of system resources.

- Programs may experience a slowdown.

Basic Definitions

Program

A program is a set of instructions and associated data that resides on the disk and is loaded by the operating system to perform a task.

Process

A process is a program in execution. A program can have several copies of it running simultaneously, but a process necessarily belongs to a single program.

Thread

A thread is the smallest unit of execution in a program witch simply executes instructions serially. The program can have several threads running simultaneously.

Process

----------------------------- Have

Global Variables

----------------------------- And have several threads

⇣ ⇣ ⇣

Thread Thread Thread

Local Local Local

Variables Variables Variables

Code Code Code

The execution of the threads is not sequential. The threads are independent of each other and run in parallel. So, it’s important to be careful when using global variables because they can be accessed by different threads and overwrite each other.

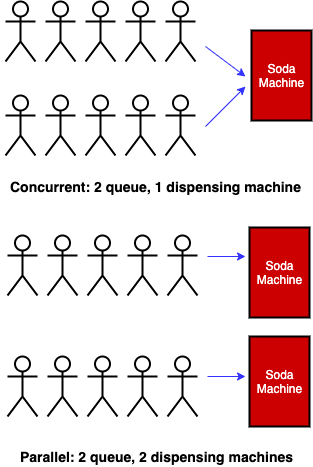

Definition between Concurrency vs Parallelism

It looks like a similar term, but it doesn’t.

A concurrent system is a system that executes concurrent programs that can be decomposed into several parts and each of those parts can be executed in any order without affecting the output.

That concurrent system can have several programs running simultaneously or in progress but this doesn’t mean that they are running at same time. If one is in progress and the rest of then are under suspension mode. The idea with the concurrent systems is maximize throughput and minimize latency.

And the parallelism is the ability to execute several programs at the same time in parallel. Usually, this is achieved by using hardware resources with multiple CPU cores.

In conclusion, the concurrent system does not need to run in parallel, but a parallel system is indeed concurrent.

Reference: https://alvinalexander.com/photos/parallelism-vs-concurrency-programming

Models of multitasking

There are two common models of multitasking, the Cooperative and Preemptive multitasking, which are described below.

The preemptive multitasking model is when the operating system preempts a program to allow another waiting task to run on the CPU. This is done by the operating system, not the program, so they can not decide how long or when they can use the CPU.

The Cooperative multitasking model is when the operating system does not preempt a program to allow another waiting task to run on the CPU. This is done by the program, not the operating system, so the program can decide how long or when it can use the CPU.

The cooperative multitasking model is the most dangerous because if you have malicious programs, it can run in an infinite loop and won’t allow other programs to run. Right now, all moderns operating systems are preemptive.

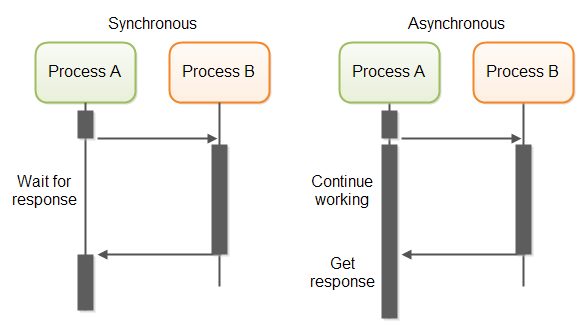

Synchronous vs Asynchronous

The syncronous execusion is when you run line-by-line code. If you have a long-running task, it might block the rest of the code until it is finished.

And the asynchronous execution is when you run code in a different thread. The async code does not need to run in a sequence, for example, if the program calls an Async function, it can follow the execution of the code and it doesn’t need to wait for the function response invoked to continue to run.

To get the result, we can use several options such as callback methods or promises. The Async code is very handy if you need to perform an extensive network or disk I/O process and it can take some time.

I/O bound and CPU bound

When you are running a program, it can consume computer resources. The program can use CPU Time, Memory, Networking Resources and Disk storage. So, when that program is running is compute-intense and you see there is a high CPI usage (close to 100%), you can say the program is CPU bound.

But, there is a limit of CPU units available. If you split that compute-intense process and execute it in parallel, you might finish the process in half of the time. But, you have an overhead of creating two threads and merging the result.

The I/O bound is when you have programs that require a good amount of resources from main memory or network interfaces to the CPU. So, the CPU and the main memory are physically separate, and there is a transfer distance and time meanwhile that information moves from one to the other. That is an I/O bound process.

The throughput vs Latency

The throughput is the number of operations that can be performed in a given time. For example, if you have a program that has to download several files from a web server, the number of files downloaded is the throughput.

The latency is the time it takes to perform an operation. With the previous example, the latency is the time it takes to download a file.

In this case, if you download files sequentially, it will take more time because you need to wait for the previous file to be downloaded. But, if you download them in parallel, it will take less time because you can download several files in parallel. In a multithreaded implementation, the throughput will go up, and the latency will go down.

The Critical Section and the Race Conditions

As I explain in the thread example, working with multi-thread applications can cause bugs that are very difficult to find. In this part, you’ll see how wrong synchronization in a critical section can cause race conditions and generate error codes.

If you are working with an application that can support working with threads, this program can run various program sections. However, you should take more care when threads from the same process try to run the same part of the code at the same time.

The Critical Section is the section of code that can be executed concurrently by multiple threads and exposes any shared data or resources used by the application.

And the Race Condition happens when you run threads that run in critical sections without thread synchronization. The threads access shared resources or program variables that can be changed by other threads at the same time.

Let’s see the following example.

1 |

|

If you run this code, the output will be:

1 | // ⇒ dotnet run |

if we want to run this in parallel with two threads insterad of one, we can use the following code:

1 | public static void RunMultipleThread() |

This strange output is because each thread is accessing the same variable x, and the value of x is changing, we going to have different output response. It actually can change with each run.

Any thread can be at any step in this process at any time, and they can step on each other when a shared resource is involved. The state of x can be changed by another thread during the time between x is being read and when it is written back.

Let’s say a thread retrieves the value of x, but hasn’t stored it yet. Another thread can also retrieve the same value of x (because no thread has changed it yet) and then they would both be storing the same value (x+1) back in x!

1 | Thread 1: reads x, value is 7 |

Source: https://stackoverflow.com/a/34745

Those Race conditions can be avoided by employing some sort of locking mechanism before the code that accesses the shared resource:

1 | static class RaceCondition |

Now we can get a better output.

The Deadlock, liveness and Reentrant Locks

There are some concepts related with concurrency to take a look at.

The deadlock is when two or more threads are waiting for each other to finish their work.

The liveness is the capacity of a program to execute in a finite amount of time. If the program experiences a deadlock, that program it’s not exhibiting liveness.

The Live-lock is the situation in which two or more processes continuously change their states in response to changes in the other process(es) without doing any useful work.

The Starvation is when a process is waiting for a resource that is not available. In other words, it never gets CPU time or access to shared resources.

Mutex Vs …

Now that we have defined several concepts related to concurrency, let’s take a look at the different types of locks and signaling in multi-threaded applications.

The Mutex or Mutual Exclusion is a synchronization mechanism that allows only one thread to access a shared resource at a time. As we have seen before, the mutex allow us access to the variable x in the RaceCondition class through several threads at the same time.

The mutex pretty much blocks the rest of the access threads to the variable x at the same time. Once the thread is released, it can access the x variable again.

On the other hand, there is Semaphore which allows us to limit access to a collection of resources. Let’s think that the traffic light as a set of permissions to access the shared resource and any new thread that wants to access the resource is blocked until the semaphore is released.

A good example of a semaphore is pool connections in a database. Let’s assume the database supports 10 connections, but there are 50 threads waiting for a connection. The semaphore only allows 10 threads to access the database at the same time, when any current thread finishes its work, it will release the semaphore and allows another thread to access the database.

So, what is the difference between a mutex and a semaphore? well.. In the mutex, the** same thread must call acquire and subsequent release** the Mutex, and in the semaphore, different threads can call acquire and subsequent release the semaphore.

Modern C# Concurrency: Tasks and Async/Await

While understanding Thread and Mutex is fundamental, modern C# development heavily relies on higher-level abstractions provided by the Task Parallel Library (TPL) and the async/await keywords. These tools simplify concurrent and parallel programming significantly.

The Task Parallel Library (TPL)

The TPL abstracts away the direct management of threads. Instead of creating a Thread, you create a Task. The TPL manages a pool of threads and schedules your tasks onto them efficiently.

Let’s rewrite our initial CPU-bound example using Task.Run:

1 | public class TplExample |

This code is cleaner and more efficient, as it leverages the shared thread pool instead of creating new, expensive threads from scratch.

Async and Await for I/O-Bound Operations

The async and await keywords are syntactic sugar that revolutionized asynchronous programming in C#. They allow you to write asynchronous code that looks and feels synchronous, without blocking threads.

This is the preferred way to handle I/O-bound work.

1 | public class AsyncAwaitExample |

When await is hit, control returns to the caller, and the UI (if in a UI app) or the web server (if in an ASP.NET app) remains responsive. This is the key to building scalable and responsive applications. By not blocking threads while waiting for I/O, a web server can handle thousands of concurrent requests with a small number of threads.