Preparation for the AZ-204 exam

https://docs.microsoft.com/en-us/learn/certifications/exams/az-204

This is my learning path for preparing for the AZ-204 exam.

Locations

| Locations | Locations |
|---|---|
| westus2 | southcentralus |
| centralus | eastus |
| westeurope | southeastasia |
| japaneast | brazilsouth |
| australiasoutheast | centralindia |

Basic Azure CLI commands

There are several ways to create a new Azure resource. The Azure portal is the most common. Its rich UI guides you through the process quickly, step by step. To create resources programmatically, you can use Azure PowerShell or the Azure CLI.

https://github.com/Azure/azure-functions-core-tools#installing?azure-portal=true


In business, to build high-quality applications and services, you need to implement a good business process.

Business processes modeled in software are often called workflows. Azure includes several technologies that you can use to build and implement workflows that integrate multiple systems:

- Logic Apps

- Microsoft Power Automate

- WebJobs

- Azure Functions

All of these technologies can accept inputs, run actions (which can include conditions), and produce outputs.

The Design-first approach

With this approach, you start by designing the workflow in Logic Apps or Microsoft Power Automate. Both work as tools for drawing out the workflow.

Logic Apps is a service to automate, orchestrate, and integrate disparate components of a distributed application. You can build complex workflows through the visual designer or in code using JSON notation. More than 200 connectors or extensions are available, and you can create your own.

Microsoft Power Automate is a service for creating workflows without development or IT Pro experience. You can create these types of flows: Automated (started by a trigger from some event), Button (runs on demand), Scheduled (runs at a specific time), and Business process (models a business process). It’s an easy-to-use tool, and behind the scenes it’s powered by Logic Apps.

Why Choose a design-first approach?

The principal question here is who will design the workflow: will it be developers or users?

The Code-first approach

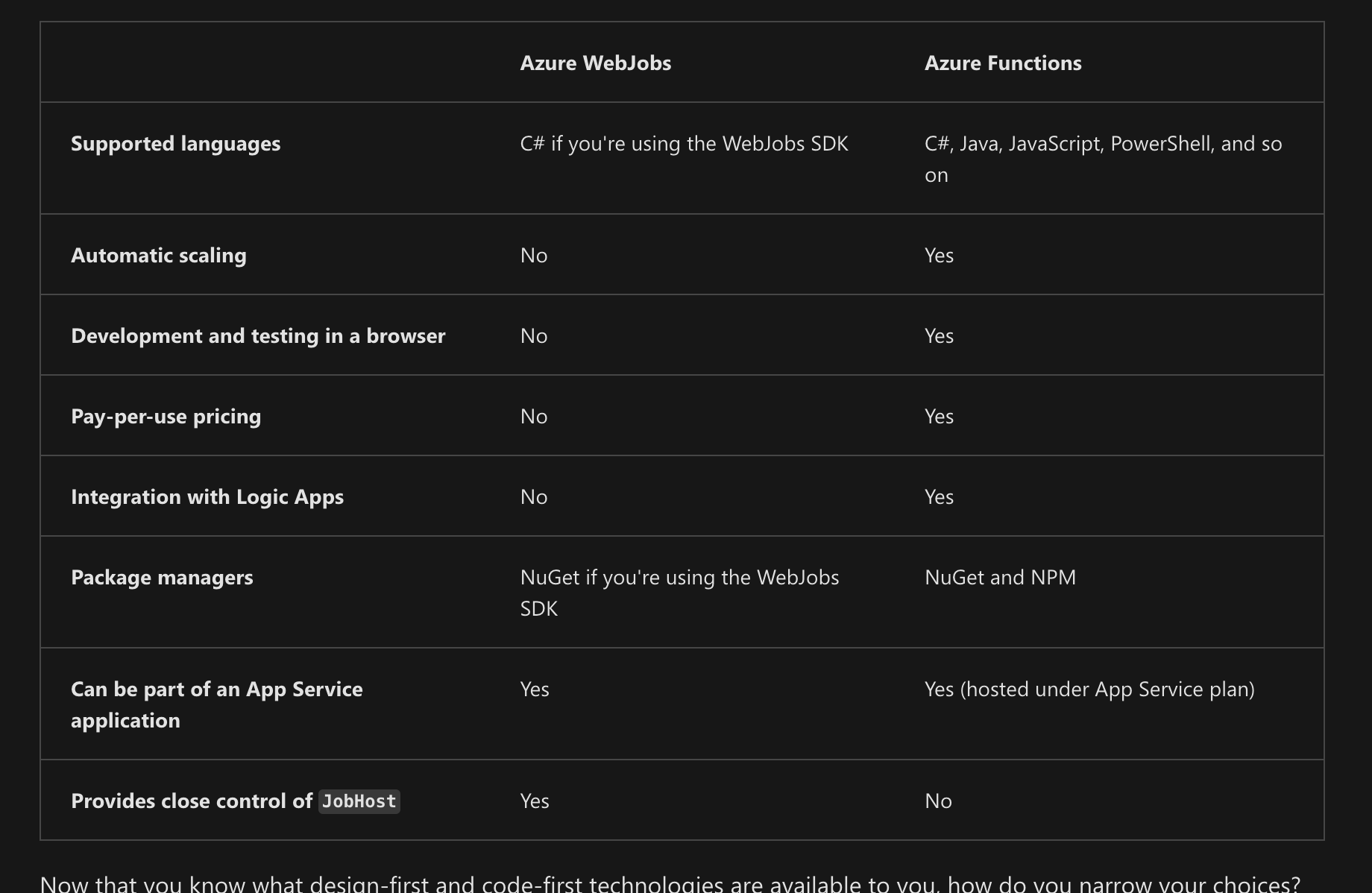

With this approach, you write the workflow in code. This gives you more control over performance and lets you write custom code when you need it. Two services support this approach:

WebJobs and the WebJobs SDK.

- The Azure App Service is a cloud-based hosting service for web applications.

- WebJobs are part of Azure App Service and focus on running a program or script automatically. A WebJob can be continuous or triggered.

Azure Functions is a simple way to run small pieces of code in the cloud without the need to develop a web application. You only pay for the time when the code runs.

- HTTPTrigger. When you want the code to execute in response to a request sent through the HTTP protocol.

- TimerTrigger. When you want the code to execute according to a schedule.

- BlobTrigger. When you want the code to execute when a new blob is added to an Azure Storage account.

- CosmosDBTrigger. When you want the code to execute in response to new or updated documents in a NoSQL database.

Azure Functions lets developers host business logic in the cloud as functions, which are small pieces of code. The advantage of this approach is that you don’t need to worry about the infrastructure. Functions run on serverless computing, so Azure manages all provisioning and maintenance of the infrastructure. It also automatically scales out or in depending on load.

Serverless computing can be thought of as functions as a service (FaaS) or as a microservice. In Azure, there are two serverless approaches to running your code: Azure Logic Apps and Azure Functions.

Azure Functions is a serverless application platform. You can write function code in languages like C#, F#, JavaScript, Python, and PowerShell Core, and it supports package managers like NuGet and npm. There are some characteristics that you need to know:

- No reserved time: you’re charged only for what you use, so you don’t need to allocate and configure a full virtual machine.

- Stateless logic: function instances are created and destroyed on demand.

- Event-driven: a function runs only in response to an event called a “trigger”, such as an HTTP request or a message being added to a queue.

- Functions can also be deployed to a non-serverless environment, so they are not tied exclusively to Azure.

- There is a default execution timeout of 5 minutes, configurable up to a maximum of 10 minutes. If the function is triggered by an HTTP request, the timeout is 2.5 minutes. For longer work, the alternative is Durable Functions.

- Under high load, hosting the code on a VM can be cheaper.

For Azure Functions, you can choose between two service plans:

- Consumption plan: It provides automatic scaling and bills you only while your functions are running. By default, the timeout is 5 minutes, and you can increase it to 10 minutes.

- Azure App Service plan: With this plan, you can configure the function timeout, including letting the function run indefinitely.

A function app must be linked to a storage account, which it uses for internal operations such as logging function executions. You can select an existing account or create a new one.

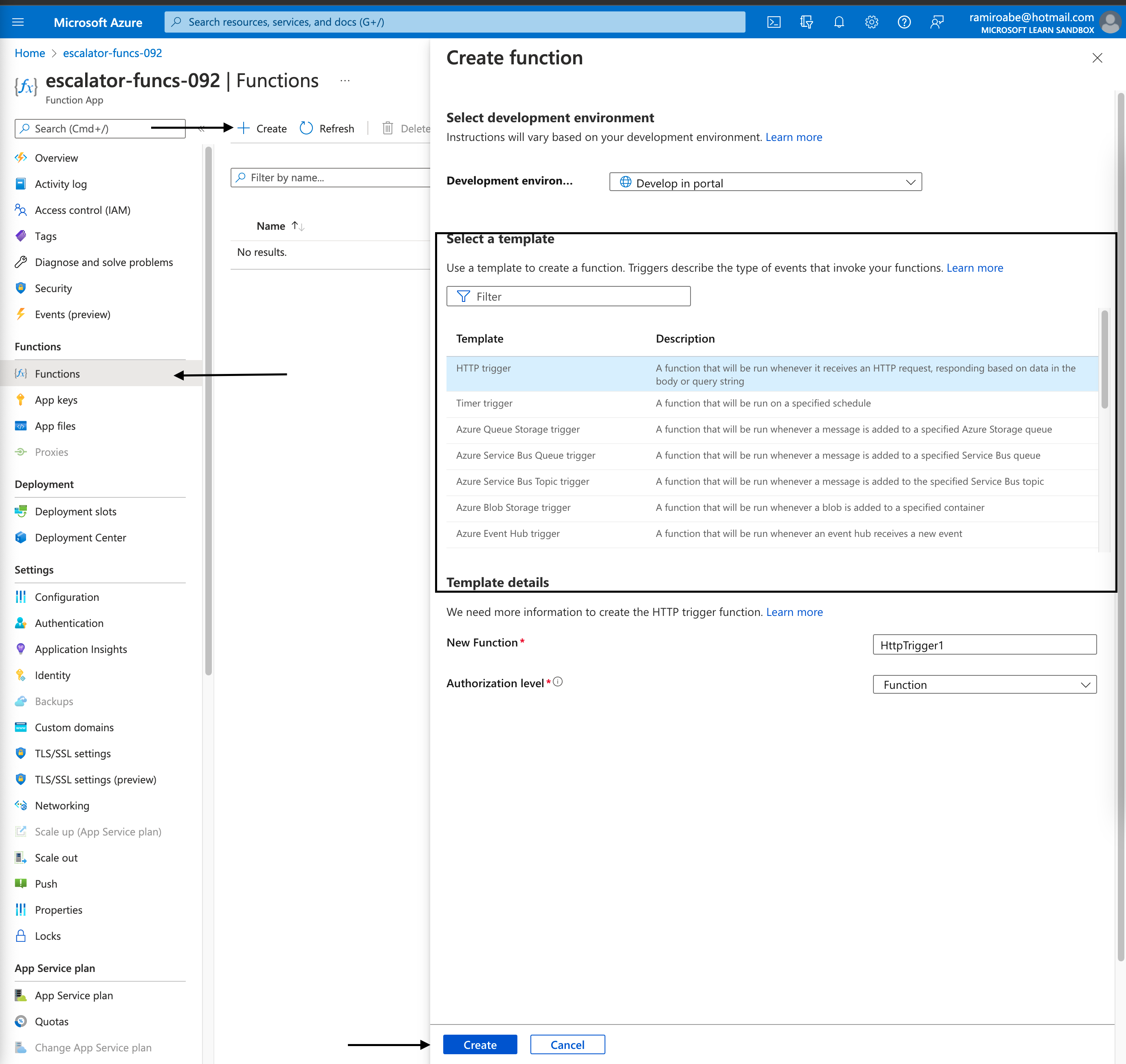

How to create a function

In the Azure portal, select Create a resource > Compute > Function App, and then create the function app.

There are several options here. The most important are the Function App name, which must be unique, and the runtime stack and version. After the function app is created, there are several more options to configure.

- Triggers: Each function must be configured with exactly one trigger.

Several events can be used to trigger the function:

- Blob storage: When a new/updated blob is detected.

- CosmosDB: When a new/updated document is detected.

- HTTP: When a request is sent.

- Event Grid: When an event is received from Event Grid.

- Timer: On a schedule.

- Microsoft Graph Events: When there is an incoming Webhook from the Microsoft Graph.

- Queue Storage: When a new item is received on a queue.

- Service Bus: When a new message is received on a topic/queue.

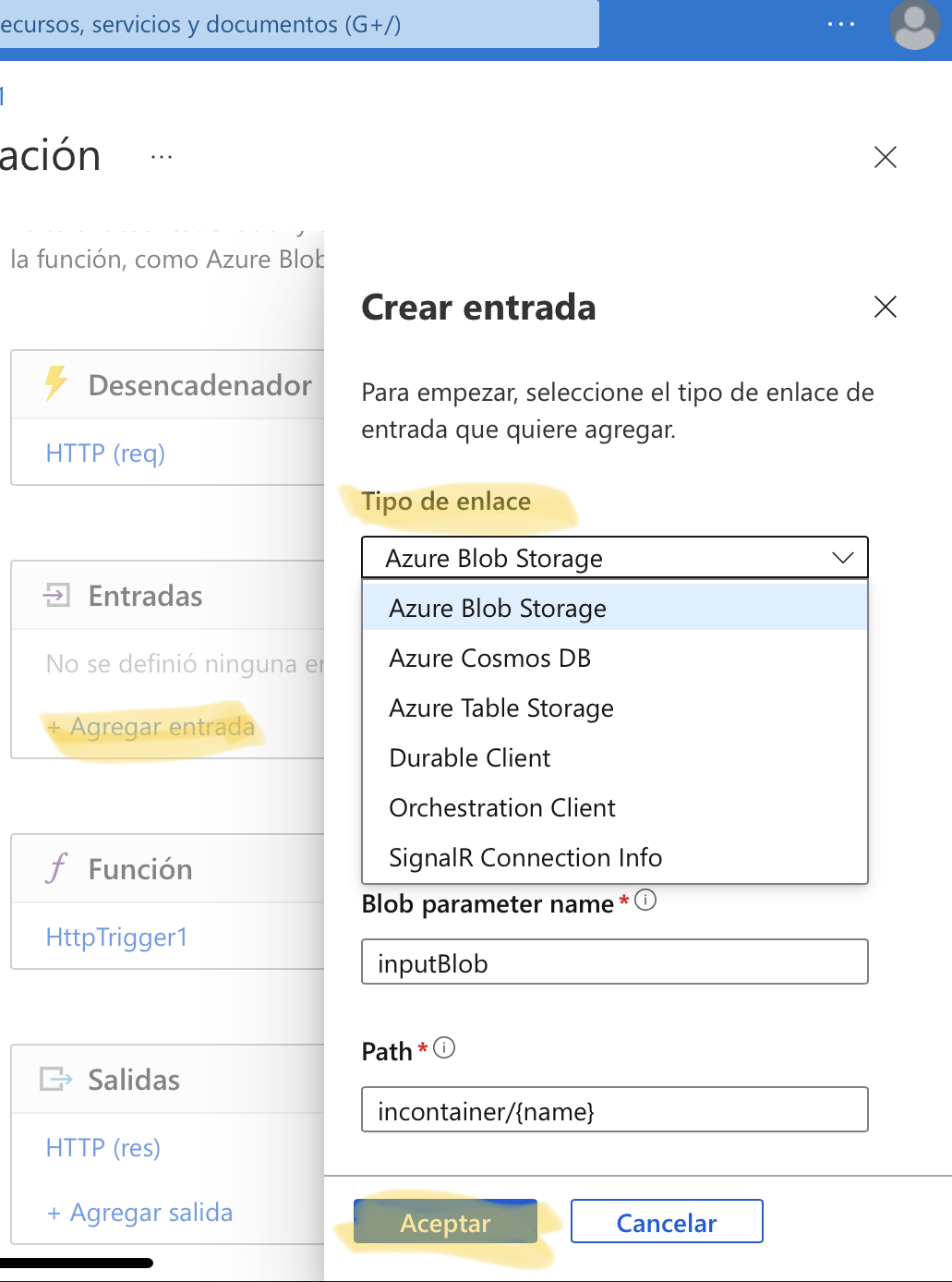

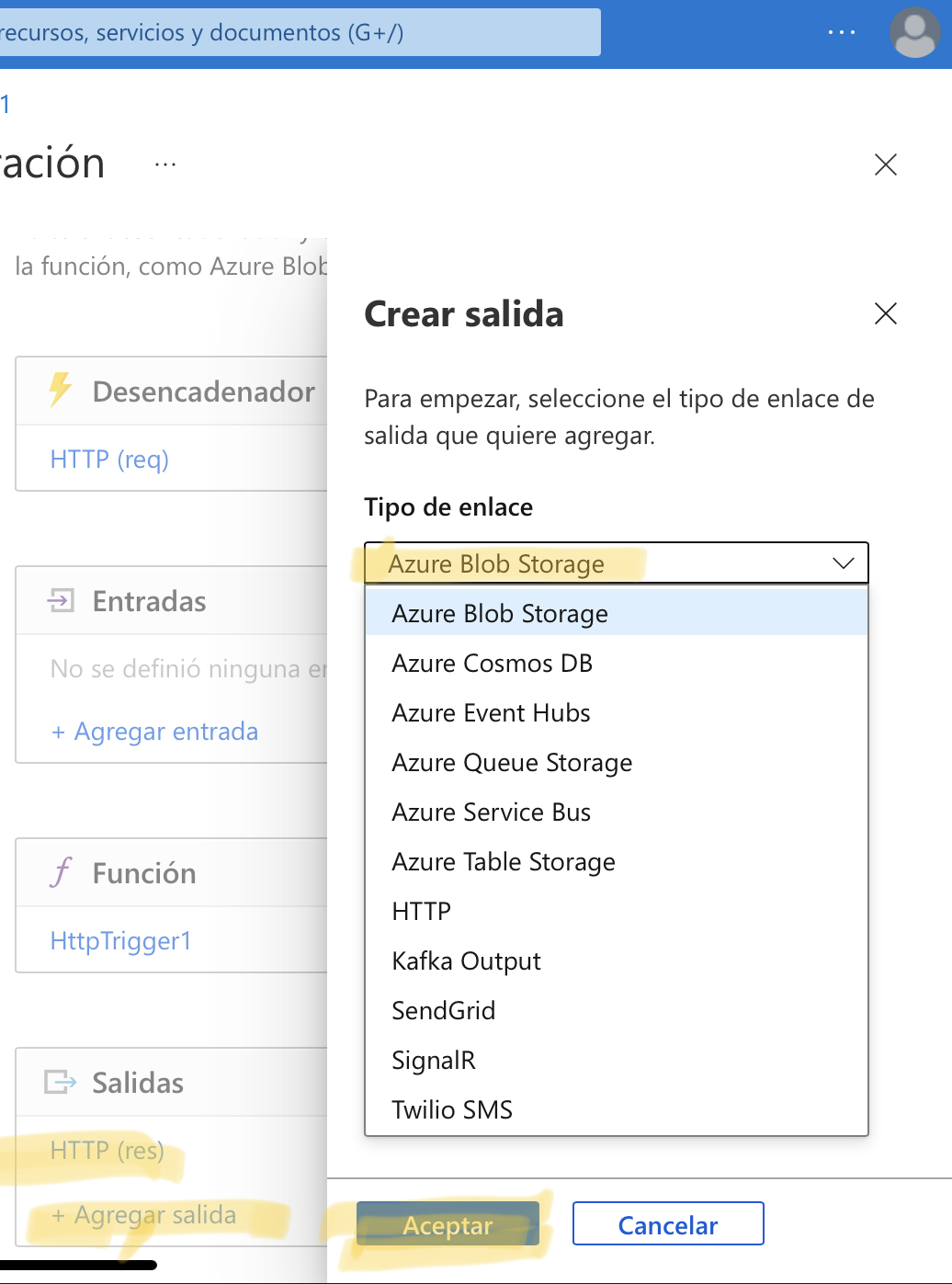

- Bindings: A binding is a declarative way to connect data and services to your function. There are two kinds of bindings: input bindings, which connect to a data source, and output bindings, which connect to a data destination. These are the bindings supported by Azure Functions:

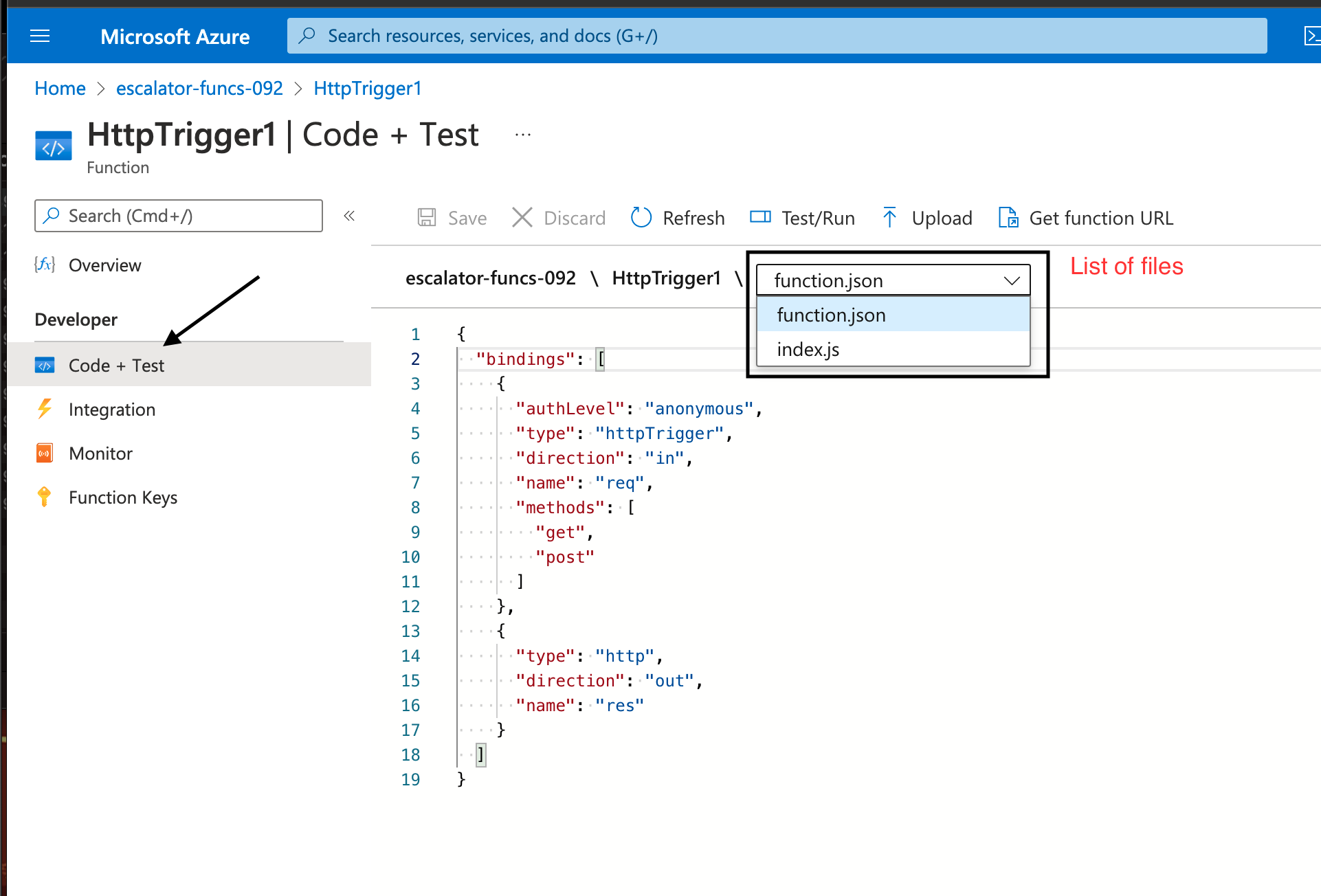

This is an example of a binding:

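```json
{
  "bindings": [
    {
      "name": "<queue-item-parameter-name>",
      "type": "queueTrigger",
      "direction": "in",
      "queueName": "myqueue-items",
      "connection": "<storage-connection-app-setting>"
    },
    {
      "name": "<table-output-name>",
      "type": "table",
      "direction": "out",
      "tableName": "outTable",
      "connection": "<storage-connection-app-setting>"
    }
  ]
}
```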

function.json

In the previous example, the first binding has the direction “in” and is a trigger on the Storage queue named “myqueue-items”. The second binding has the direction “out” and inserts into the table “outTable” in Azure Table Storage. Inputs and outputs can be many different services. For example, instead of writing to a table, you could send an email using the SendGrid binding, or do both.

When you create a function, you first choose a template.

As you can see, there are several templates to work with. We’re going to select HTTP trigger.

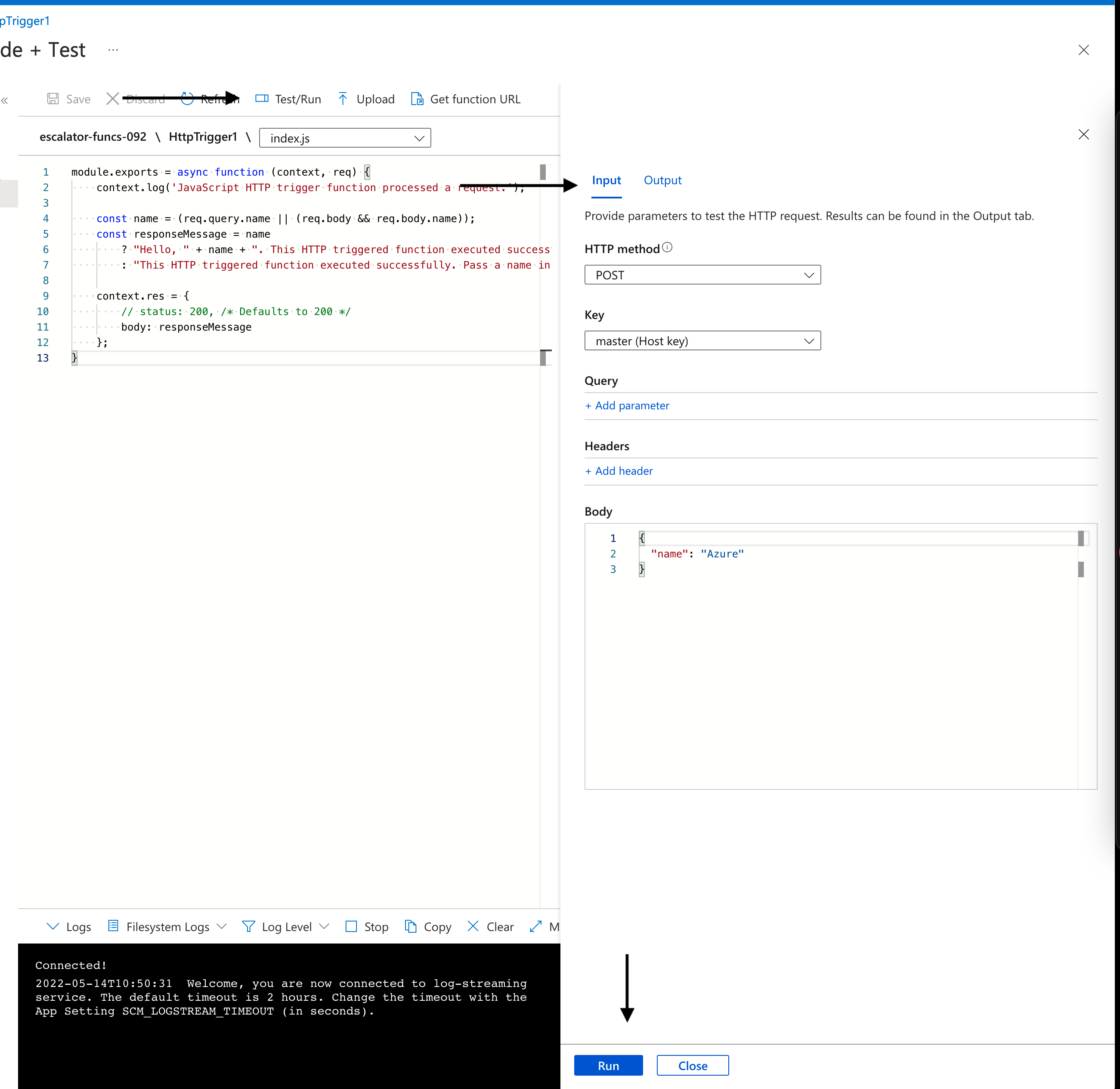

After the function is created, two files exist by default: the function.json configuration file and the placeholder function code in index.js. In index.js, the function receives the parameter req, which is the trigger binding you configured. The response goes out through res, the output binding, which the code sets as context.res.

Both bindings are defined in the function.json file. The first binding, with direction in, is named req. The second binding, with direction out, is named res.

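```json
{
  "bindings": [
    {
      "authLevel": "anonymous",
      "type": "httpTrigger",
      "direction": "in",
      "name": "req",
      "methods": ["get", "post"]
    },
    {
      "type": "http",
      "direction": "out",
      "name": "res"
    }
  ]
}
```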
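For reference, here’s a minimal sketch of what the placeholder index.js can look like for that HTTP trigger in the Node.js v3 programming model (module.exports with context and req). Looking up name in the query string or body and the exact response messages are illustrative assumptions, not necessarily the template’s exact text.

```javascript
// index.js: a minimal HTTP-triggered function (Node.js v3 programming model).
// `req` is the "req" trigger binding; assigning context.res fills the "res"
// output binding declared in function.json.
async function httpTrigger(context, req) {
  context.log("JavaScript HTTP trigger function processed a request.");

  // Look for "name" in the query string first, then in a JSON request body.
  const name = (req.query && req.query.name) || (req.body && req.body.name);

  if (name) {
    context.res = { status: 200, body: `Hello, ${name}!` };
  } else {
    context.res = {
      status: 400,
      body: "Please pass a name on the query string or in the request body.",
    };
  }
}

// The Functions host loads the handler from module.exports.
module.exports = httpTrigger;
```

The handler only reads req and writes context.res, so you can call it with a plain object as the context to try it out locally.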

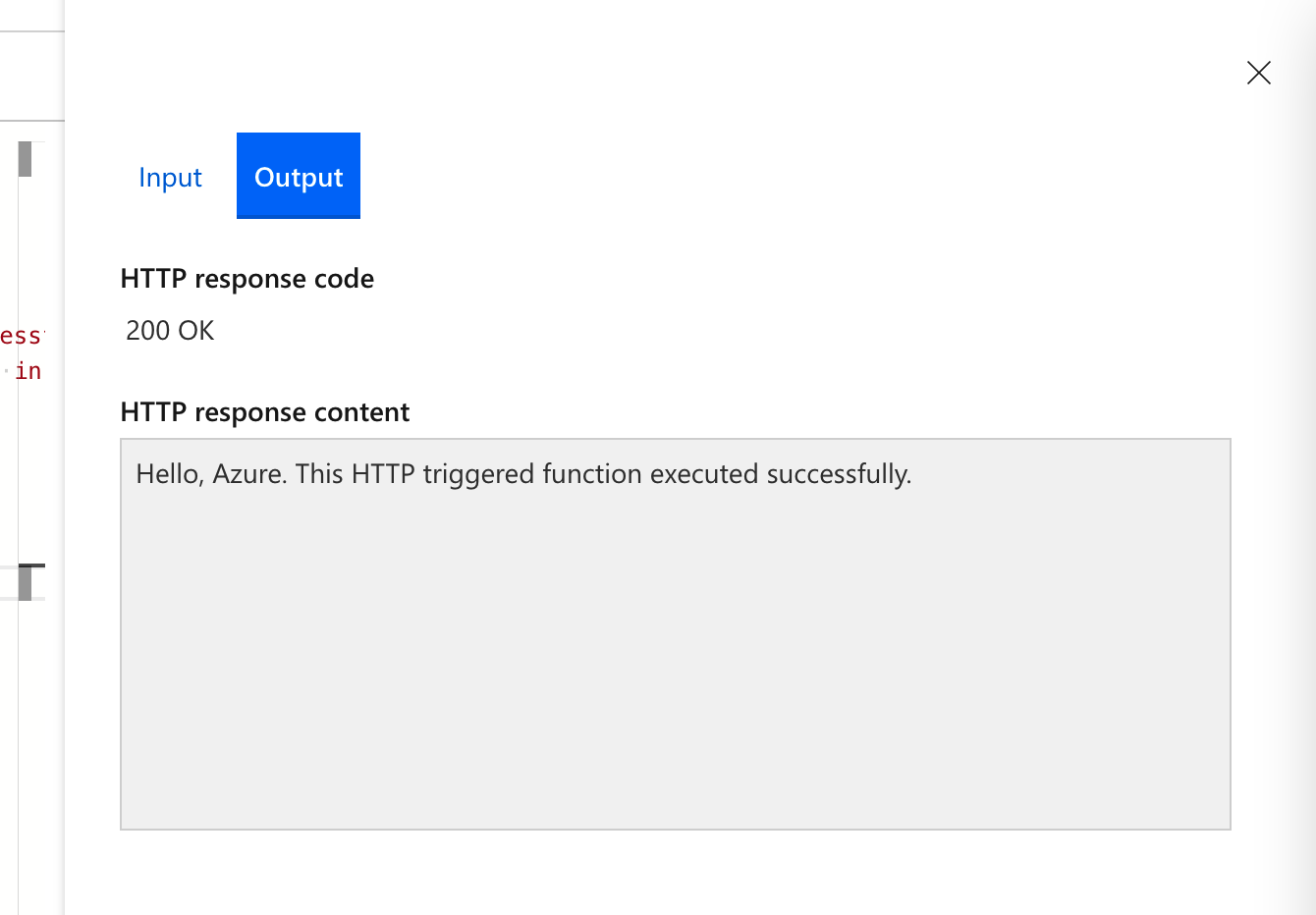

The Test/Run option helps you test the function. It works like Postman for that function.

If you want to test it from outside the portal, select Get function URL to see the URL for calling the function.

To monitor the function, go to the function app page, find the Application Insights option, and turn it on. It gives you all the monitoring information you need.

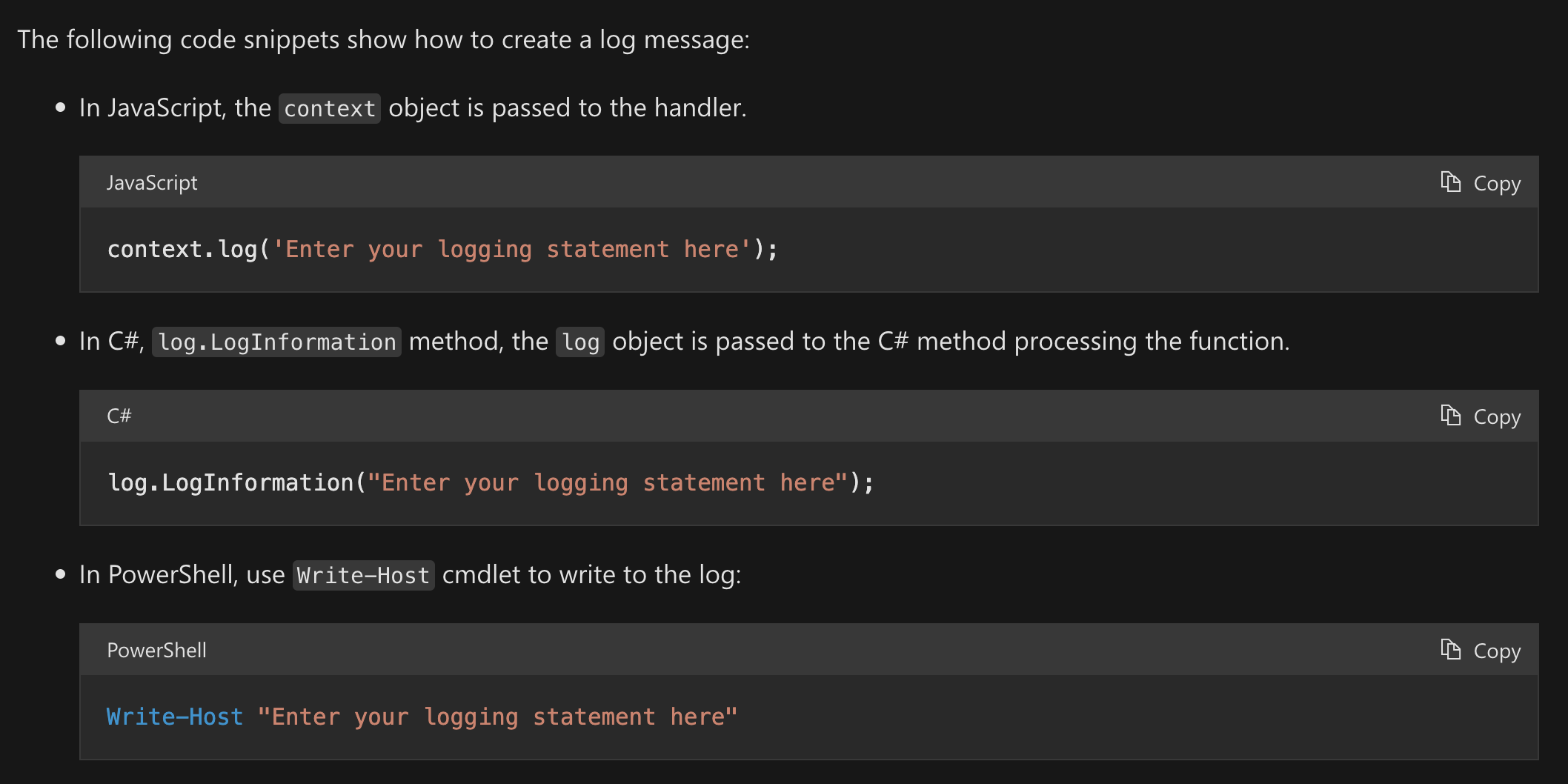

For logging, the context object already includes logging methods such as context.log.

You can see the output in the Application Insights dashboard under Monitoring > Logs.

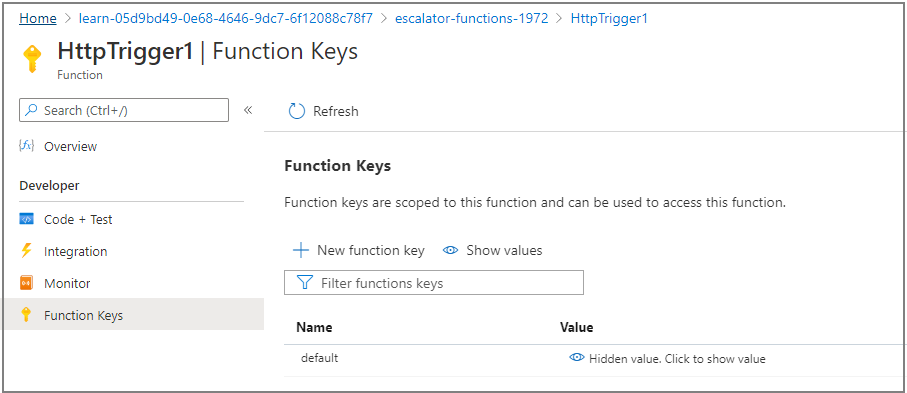

Secure HTTP triggers

There are several ways to secure the function. We selected Anonymous as the Authorization level when we created the function.

By default, a new function uses the Function authorization level, which requires a specific API key to execute the function. You can manage these keys in the Function Keys section. The Admin level also requires a specific API key.

To change the level, go to the Code + Test area, select the function.json file, and set the binding’s authLevel property to “function”.

After you change this authorization level, you need to supply the API key to execute the function. You can send the secret token as a query string parameter named code or as a header named x-functions-key.

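The difference between the Function and Admin levels is their scope. A function key is specific to one function, whereas the Admin level requires the master key, which applies to the whole function app.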
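Here is a small sketch of the two ways to pass the key. The code query-string parameter and the x-functions-key header come from the text above. The helper names, the URL, and the key value are hypothetical placeholders.

```javascript
// Two equivalent ways to pass a function key when the function's authLevel is
// "function". The URL and key used below are placeholders, not real values.
function withKeyInQuery(functionUrl, key) {
  const url = new URL(functionUrl);
  url.searchParams.set("code", key); // key as the "code" query-string parameter
  return { url: url.toString(), headers: {} };
}

function withKeyInHeader(functionUrl, key) {
  return { url: functionUrl, headers: { "x-functions-key": key } }; // key as a header
}

// Usage with the global fetch in Node.js 18+ (not run here):
// const { url, headers } = withKeyInHeader("https://<app-name>.azurewebsites.net/api/<function-name>", process.env.FUNCTION_KEY);
// const response = await fetch(url, { headers });
```

The header variant keeps the key out of URLs, which tend to end up in logs and browser history.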

Execute an Azure Function with triggers

As explained earlier, a function is triggered by an event. The event can be an HTTP request, a message added to a queue, a document added to Cosmos DB, a blob being updated, a timer firing on schedule, a webhook from Microsoft Graph, and so on. Let’s see another example.

However, a trigger is not the same as a binding. A function has exactly one trigger but can have multiple input and output bindings, and bindings are optional. An input binding is data your function receives. An output binding is data your function sends.

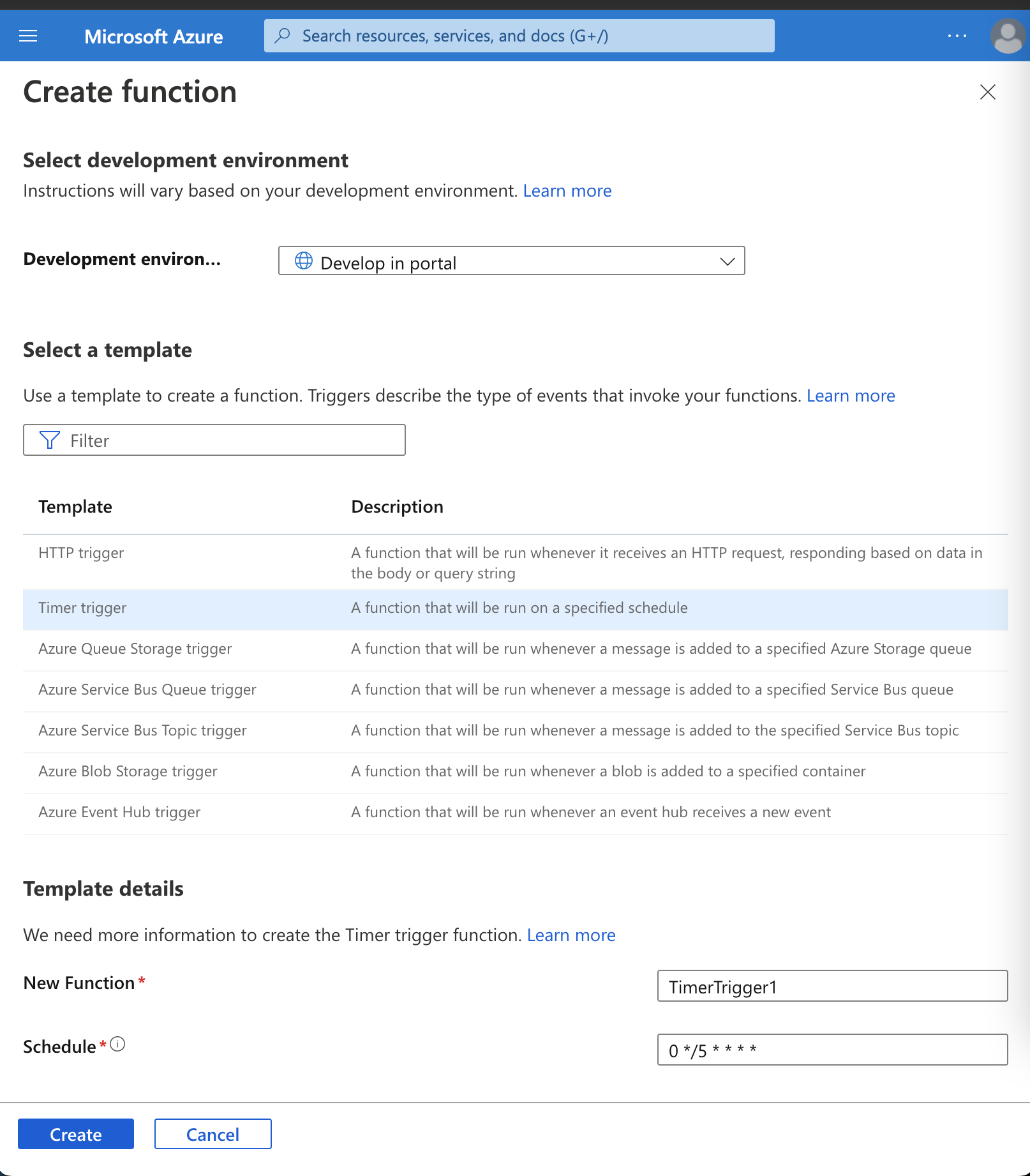

The timer trigger

Let’s say we need to execute a function every 5 minutes. We can create a timer trigger, which needs two things: the parameter name and the schedule, written as a CRON expression.

Here is a table for a basic understanding of the CRON expression:

{second} {minute} {hour} {day} {month} {day of the week}

| Field name | Allowed values | Allowed special characters |
|---|---|---|
| Seconds | 0-59 | , - * / |
| Minutes | 0-59 | , - * / |
| Hours | 0-23 | , - * / |
| Day of month | 1-31 | , - * / |
| Month | 1-12 or JAN-DEC | , - * / |
| Day of week | 0-6 or SUN-SAT | , - * / |

For example, a CRON expression to create a trigger that executes every five minutes looks like: 0 */5 * * * *

| Special character | Meaning | Example |
|---|---|---|
| * | Selects every value in a field | An asterisk “*” in the day of the week field means every day. |
| , | Separates items in a list | A comma “1,3” in the day of the week field means just Mondays (day 1) and Wednesdays (day 3). |
| - | Specifies a range | A hyphen “10-12” in the hour field means a range that includes the hours 10, 11, and 12. |
| / | Specifies an increment | A slash “*/10” in the minutes field means an increment of every 10 minutes. |

Special character Table

To create a function with a timer trigger, select the Timer trigger template and set the CRON expression.

The Schedule value is a CRON expression with six places for time precision: {second} {minute} {hour} {day} {month} {day-of-week}. For example, in */20 * * * * *, the first place (seconds) has the value */20, which means every 20 seconds.
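To check a CRON expression before deploying it, here’s a small sketch that tests whether a given date (read in UTC) matches a six-field expression. It supports only numbers and the four special characters from the table. It does not handle names like JAN-DEC or SUN-SAT, and it simply requires every field to match. Treat it as a learning aid, not a reimplementation of the Functions scheduler.

```javascript
// Six NCRONTAB fields in order: {second} {minute} {hour} {day} {month} {day-of-week}
const FIELD_LIMITS = [
  [0, 59], // seconds
  [0, 59], // minutes
  [0, 23], // hours
  [1, 31], // day of month
  [1, 12], // month
  [0, 6], // day of week (0 = Sunday)
];

// Expand one field such as "*", "5", "1,3", "10-12" or "*/20" into the set of
// values it allows. Names like JAN or MON are not supported in this sketch.
function expandField(field, min, max) {
  const values = new Set();
  for (const part of field.split(",")) {
    const [range, stepText] = part.split("/");
    const step = stepText === undefined ? 1 : Number(stepText);
    let start;
    let end;
    if (range === "*") {
      start = min;
      end = max;
    } else if (range.includes("-")) {
      [start, end] = range.split("-").map(Number);
    } else {
      start = Number(range);
      end = stepText === undefined ? start : max; // "5/10" = from 5, every 10
    }
    const valid =
      [start, end, step].every(Number.isInteger) && step >= 1 && min <= start && start <= end && end <= max;
    if (!valid) throw new Error(`Invalid CRON field "${field}"`);
    for (let v = start; v <= end; v += step) values.add(v);
  }
  return values;
}

// True when `date` (evaluated in UTC) matches the expression. Every field must match.
function matches(expression, date) {
  const fields = expression.trim().split(/\s+/);
  if (fields.length !== 6) throw new Error("Expected six fields");
  const actual = [
    date.getUTCSeconds(),
    date.getUTCMinutes(),
    date.getUTCHours(),
    date.getUTCDate(),
    date.getUTCMonth() + 1,
    date.getUTCDay(),
  ];
  return fields.every((field, i) => expandField(field, ...FIELD_LIMITS[i]).has(actual[i]));
}
```

For example, 0 */5 * * * * matches 10:15:00 but not 10:16:00, and */20 * * * * * matches second 40 but not second 30.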

Execute an Azure Function with Blob Storage

If you want to run a function when someone uploads or updates a file, there is a trigger for that.

But what is Azure Storage? It’s a storage solution that supports many data types, including blobs, queues, and NoSQL data. Inside Azure Storage, you can use Azure Blob Storage to store files and serve them to users, to stream video and audio, and to store logging data. All of this is highly available, secure, scalable, and managed.

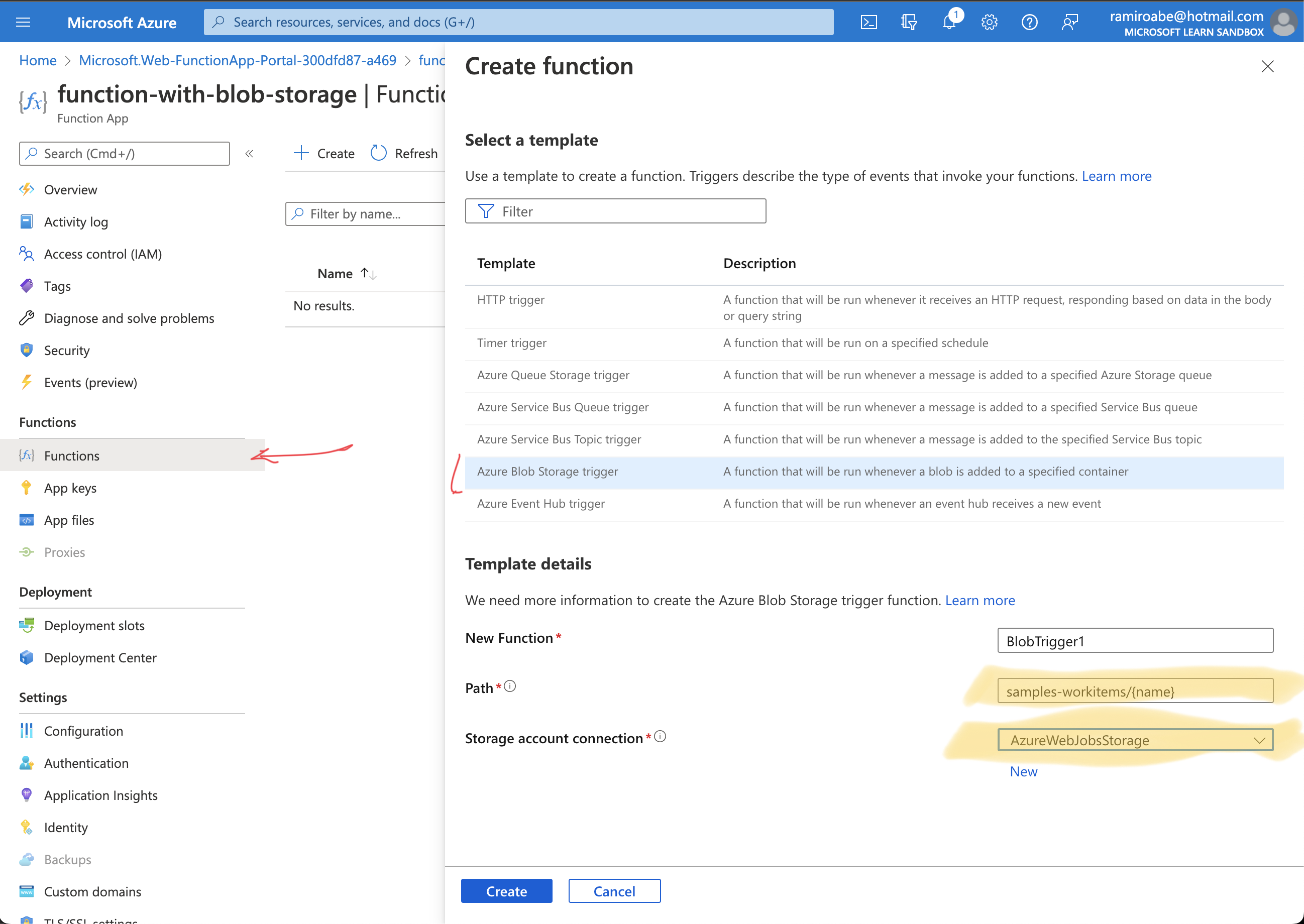

Now, let’s create a new blob trigger. To do that, create a new function and select the Azure Blob Storage trigger template. Then set the Path to the blob. This is important because the function monitors that path for uploaded or updated blobs.

After that, try to upload a new file to the blob storage and see if the function was executed.
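To see why the path matters, here’s an illustrative sketch of how a path pattern like samples-workitems/{name} selects blobs and captures the {name} part. The container name and pattern are hypothetical. The Functions runtime does this matching for you and exposes captured values on context.bindingData, so the code only demonstrates the idea.

```javascript
// Illustrative only: how a blob trigger path such as "samples-workitems/{name}"
// selects blobs and captures {name}. The runtime does this matching itself.
function matchBlobPath(pattern, blobPath) {
  const names = [];
  const source = pattern
    .split(/(\{[^}]+\})/) // keep {tokens} as separate pieces
    .map((part) => {
      const token = part.match(/^\{([^}]+)\}$/);
      if (token) {
        names.push(token[1]);
        return "(.+)"; // a {token} captures one or more characters
      }
      return part.replace(/[.*+?^${}()|[\]\\]/g, "\\$&"); // literal text
    })
    .join("");
  const match = new RegExp(`^${source}$`).exec(blobPath);
  if (!match) return null; // blob is outside the monitored path
  return Object.fromEntries(names.map((name, i) => [name, match[i + 1]]));
}
```

A blob uploaded to another container doesn’t match, so the function isn’t triggered for it.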

Check the binding types

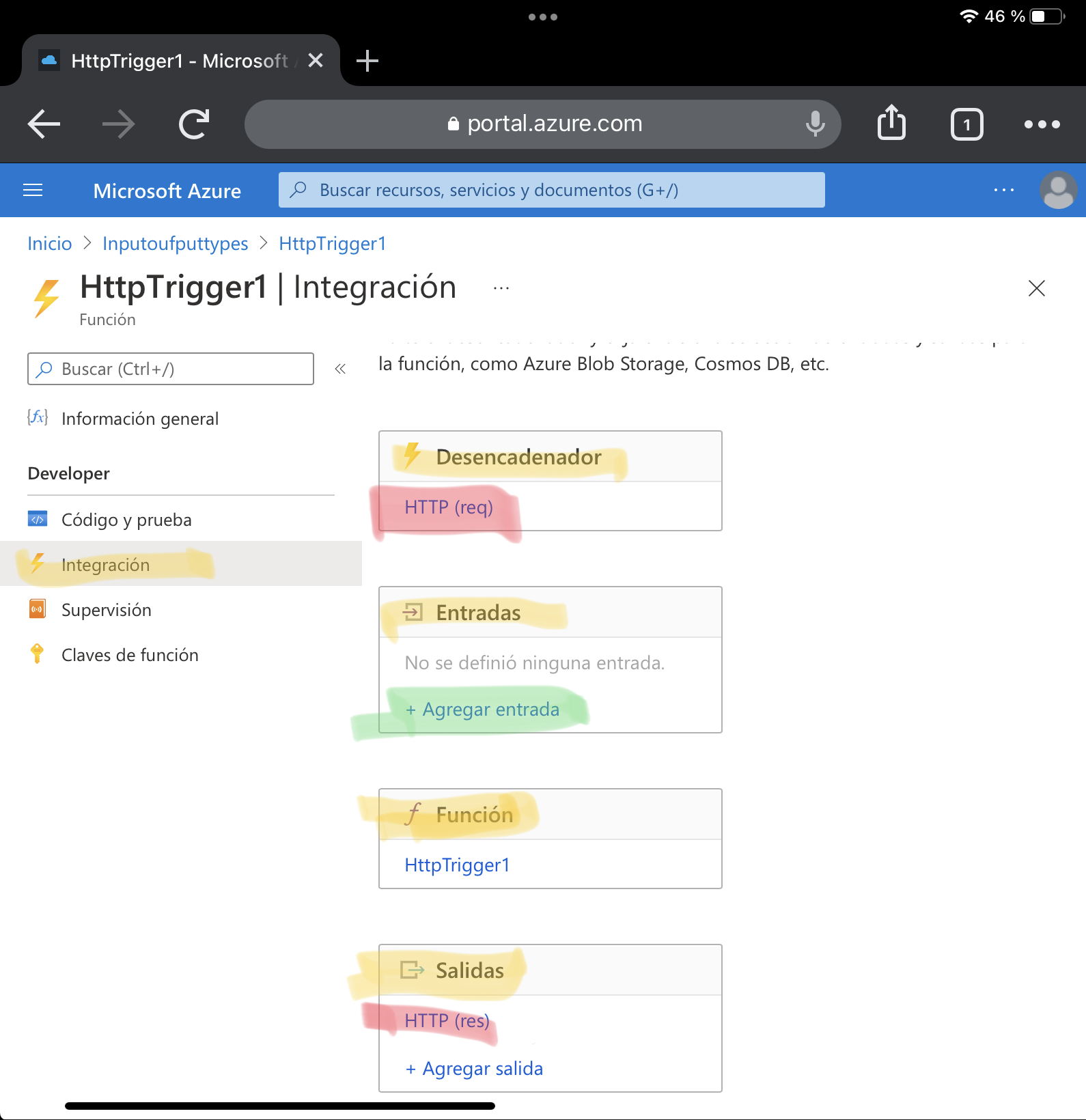

To see all the binding types, go to the Integration area, where you’ll see the trigger and outputs that were already created.

You can’t add more than one trigger to a function, so to change it you need to delete the trigger and create a new one. However, the Inputs and Outputs sections accept more than one binding, so a function can take more than one input and return more than one output value.

Search information inside a database with Azure Cosmos DB Account

Let’s see how to connect to a database from a function and read data from it.

Assume that we have a Cosmos DB account with a container called Bookmarks. Let’s add a new Azure Cosmos DB binding and configure it to point to that database and collection.

Let’s update the code and use that binding.


After saving the script, the Logs pane shows the Connected! message. Now let’s modify the function.json file to search by ID.

In the Cosmos DB input binding, update the id and partitionKey properties:

```json
"id": "{id}",
"partitionKey": "{id}"
```

Id: the document ID that we defined when we created the Bookmarks Azure Cosmos DB container.

Partition key: the partition key that you defined when you created the Bookmarks Azure Cosmos DB collection. The key entered here is written in binding-expression format, such as {id}, and must match the one in the collection.

So, when you test the function, you need to pass the id as a parameter. The binding looks up the document with that id in the collection and populates context.bindings.bookmark. You don’t have to manage the database connection or the steps required to query it.
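Putting it together, a find-bookmark handler only has to read the document the binding already looked up. This sketch assumes the Cosmos DB input binding is named bookmark and that each document has a url property. Both names are assumptions for illustration.

```javascript
// Hypothetical find-bookmark handler (Node.js v3 model). Assumes function.json
// declares a Cosmos DB input binding named "bookmark" with "id": "{id}", so the
// runtime has already looked up the document before this code runs.
async function findBookmark(context, req) {
  const bookmark = context.bindings.bookmark; // undefined when no document matched

  if (bookmark) {
    context.res = {
      status: 200,
      headers: { "Content-Type": "application/json" },
      body: { url: bookmark.url },
    };
  } else {
    context.res = {
      status: 404,
      headers: { "Content-Type": "application/json" },
      body: "No bookmarks found",
    };
  }
}

module.exports = findBookmark;
```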

Output binding types

Note: not every binding type supports both input and output directions.

- Blob Storage - You can use the blob output binding to write blobs.

- Azure Cosmos DB - The Azure Cosmos DB output binding lets you write a new document to an Azure Cosmos DB database using the SQL API.

- Event Hubs - Use the Event Hubs output binding to write events to an event stream. You must have send permission to an event hub to write events to it.

- HTTP - Use the HTTP output binding to respond to the HTTP request sender. This binding requires an HTTP trigger and allows you to customize the response associated with the trigger’s request. This can also be used to connect to webhooks.

- Microsoft Graph - Microsoft Graph output bindings allow you to write to files in OneDrive, modify Excel data, and send email through Outlook.

- Mobile Apps - The Mobile Apps output binding writes a new record to a Mobile Apps table.

- Notification Hubs - You can send push notifications with Notification Hubs output bindings.

- Queue Storage - Use the Azure Queue Storage output binding to write messages to a queue.

- SendGrid - Send emails using SendGrid bindings.

- Service Bus - Use Azure Service Bus output binding to send queue or topic messages.

- Table Storage - Use an Azure Table Storage output binding to write to a table in an Azure Storage account.

- Twilio - Send text messages with Twilio.

Let’s say we connect two outputs to the function. The first creates the object in Azure Cosmos DB if it doesn’t already exist. The second adds a new message to an Azure Queue Storage queue after the new item is created in Cosmos DB.

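Here is a minimal sketch of that two-output flow in the Node.js v3 programming model. Several details are assumptions: the binding names bookmark (Cosmos DB input), newbookmark (Cosmos DB output), and newmessage (Queue Storage output), the id/url body fields, and the status codes. The names must match whatever you declare in function.json.

```javascript
// Hypothetical add-bookmark handler. Assumes function.json declares:
//   - "bookmark":    Cosmos DB input binding looked up by {id}
//   - "newbookmark": Cosmos DB output binding
//   - "newmessage":  Queue Storage output binding
async function addBookmark(context, req) {
  const { id, url } = req.body || {};
  if (!id || !url) {
    context.res = { status: 400, body: "Please pass an id and url in the request body." };
    return;
  }

  if (context.bindings.bookmark) {
    // The input binding found a document with this id, so don't create a duplicate.
    context.res = { status: 422, body: "Bookmark already exists." };
    return;
  }

  const document = { id, url };
  context.bindings.newbookmark = document; // first output: write the document to Cosmos DB
  context.bindings.newmessage = document; // second output: enqueue a message for further processing
  context.res = { status: 200, body: "Bookmark added!" };
}

module.exports = addBookmark;
```

Setting a property on context.bindings is all it takes to write to an output. The runtime performs the actual Cosmos DB and queue writes after the function returns.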

Additional resources

Although this isn’t intended to be an exhaustive list, the following are some resources related to the topics covered in this module that you might find interesting:

Azure Functions documentation

Azure Serverless Computing Cookbook

How to use Queue storage from Node.js

Introduction to Azure Cosmos DB: SQL API

A technical overview of Azure Cosmos DB

Azure Cosmos DB documentation

Create a long-running serverless workflow with Durable Functions

Durable Functions is an extension of Azure Functions that lets you create long-running, stateful operations in Azure.

It allows you to implement complex stateful functions in a serverless environment.

This is useful when a process has:

- Multiple steps

- Steps with different durations

Such processes can be complex and costly, and coordinating their steps takes effort. Some of the benefits of using Durable Functions are:

- Waiting asynchronously for one or more external events to complete, and then executing steps after those events have completed.

- Chain functions together. Patterns like fan-out/fan-in can be implemented here, allowing one function to invoke others in parallel and then collect all the results.

- Orchestrate and coordinate functions in specifically designed workflows.

- State management.
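The fan-out/fan-in idea can be shown in plain JavaScript with Promise.all. This is only an analogy for the pattern, not the Durable Functions API. There’s no checkpointing or replay here, and that is exactly what Durable Functions adds on top.

```javascript
// Plain JavaScript illustration of the fan-out/fan-in *pattern* only.
// This is NOT the Durable Functions API: nothing is checkpointed, so if the
// process stops, all progress is lost. In Durable Functions, an orchestrator
// would schedule activity functions instead of calling them directly.
async function fanOutFanIn(workItems, activity) {
  // Fan out: start one activity per work item so they run concurrently.
  const pending = workItems.map((item) => activity(item));

  // Fan in: wait until every activity has finished; results keep input order.
  return Promise.all(pending);
}
```

For example, fanOutFanIn([1, 2, 3], async (n) => n * 10) resolves to [10, 20, 30].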

There are three types of durable functions: Client, Orchestrator, and Activity.

Okay, let’s create a long-running serverless workflow. To do that, create a new function app like the previous one, go to the Development Tools option, and select App Service Editor. Then open the console, create the package.json file, and run the following command:

```shell
npm install durable-functions
```

Then go back to the function app, create a function, and select the Durable Functions template.

Let’s start writing the first code in the function. By default, the function comes with code that starts with:

```javascript
const df = require("durable-functions");
```

TODO: Add a better example for Durable Functions. Sorry for the incomplete example D:

You can look at the Durable Functions documentation to learn more about it.

Durable Functions patterns and technical concepts

Another example with .NET

The example is taken from © 2019 Scott J Duffy and SoftwareArchitect.ca, all rights reserved

I’ll create three functions:

First, the activity function contains the business logic and produces the final result of the orchestration. Let’s create a new function called CalculateTax with the Durable Functions activity template.


Second, let’s create a new function called Conductor with the Durable Functions orchestrator template. This function orchestrates several calls to the CalculateTax function.


Finally, let’s create a new function called Starter with the Durable Functions HTTP starter template. This function calls the orchestrator with the parameter. The template code is good enough to run the durable functions.


Let’s start with the execution of the functions.

curl --location --request GET 'https://test-funcapp-rb.azurewebsites.net/api/orchestrators/Conductor?code=R8GozXTET--aQrF4Y06-QT5yEFL7-GW-T28LTDpjmDHkAzFukmrYLQ==&functionName=Conductor'

Calling the starter returns a JSON response. To check the result of the execution, call the statusQueryGetUri URL included in that response.

The runtimeStatus property returns Completed, and the output property contains the execution result.
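Instead of calling the statusQueryGetUri by hand, a client can poll it until the orchestration finishes. This sketch assumes Node.js 18+ for the global fetch. The polling interval, attempt limit, and function name are arbitrary choices.

```javascript
// Poll the statusQueryGetUri returned by the HTTP starter until the
// orchestration reaches a terminal runtimeStatus. fetchFn defaults to the
// global fetch (Node.js 18+) and can be replaced with a stub for testing.
const TERMINAL_STATUSES = new Set(["Completed", "Failed", "Terminated", "Canceled"]);

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function waitForOrchestration(
  statusQueryGetUri,
  { fetchFn = globalThis.fetch, intervalMs = 2000, maxAttempts = 30 } = {}
) {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const response = await fetchFn(statusQueryGetUri);
    const status = await response.json();
    if (TERMINAL_STATUSES.has(status.runtimeStatus)) {
      return status; // when Completed, the result is in status.output
    }
    await sleep(intervalMs); // still Pending or Running: wait and try again
  }
  throw new Error(`Orchestration still running after ${maxAttempts} attempts`);
}
```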

Develop, test, and publish Azure Functions by using Azure Functions Core Tools

All the previous steps were done through the Azure portal.

Some useful commands:

Azure Storage account.

```shell
export STORAGE_ACCOUNT_NAME=mslsigrstorage$(openssl rand -hex 5)
```

Azure Cosmos DB, created with the az cosmosdb create command.

Create Azure SignalR

```shell
SIGNALR_SERVICE_NAME=msl-sigr-signalr$(openssl rand -hex 5)
```

And update an Azure function app with SignalR using the az resource update command.

Get the Connection string for all resources created


Use a storage account to host a static website

When you copy files to a storage container named $web, those files are available to web browsers via a secure server using the https://<ACCOUNT_NAME>.<ZONE_NAME>.web.core.windows.net/<FILE_NAME> URI scheme.

Azure API Management

You can use Azure Functions and Azure API Management to build complete APIs with a microservices architecture.

A microservices architecture focuses on creating many small services, separated by domain responsibility. Each service is independent of the others and is developed, deployed, and scaled independently.

Serverless architecture complements this. It’s a model for developing and deploying applications in a cloud environment, and it only costs money when the service receives traffic.

Azure API Management is an easy way to assemble several functions into a single API in a serverless architecture. It provides tools to publish, secure, transform, manage, and monitor APIs.

It enables you to create and manage modern API gateways for existing backend services, no matter where they’re hosted.

In the following steps, you’ll add an Azure Function app to Azure API Management. Later, you’ll add a second function app to the same API Management instance to create a single serverless API from multiple functions. Let’s start by using a script to create the functions:

```shell
git clone https://github.com/MicrosoftDocs/mslearn-apim-and-functions.git ~/OnlineStoreFuncs
```

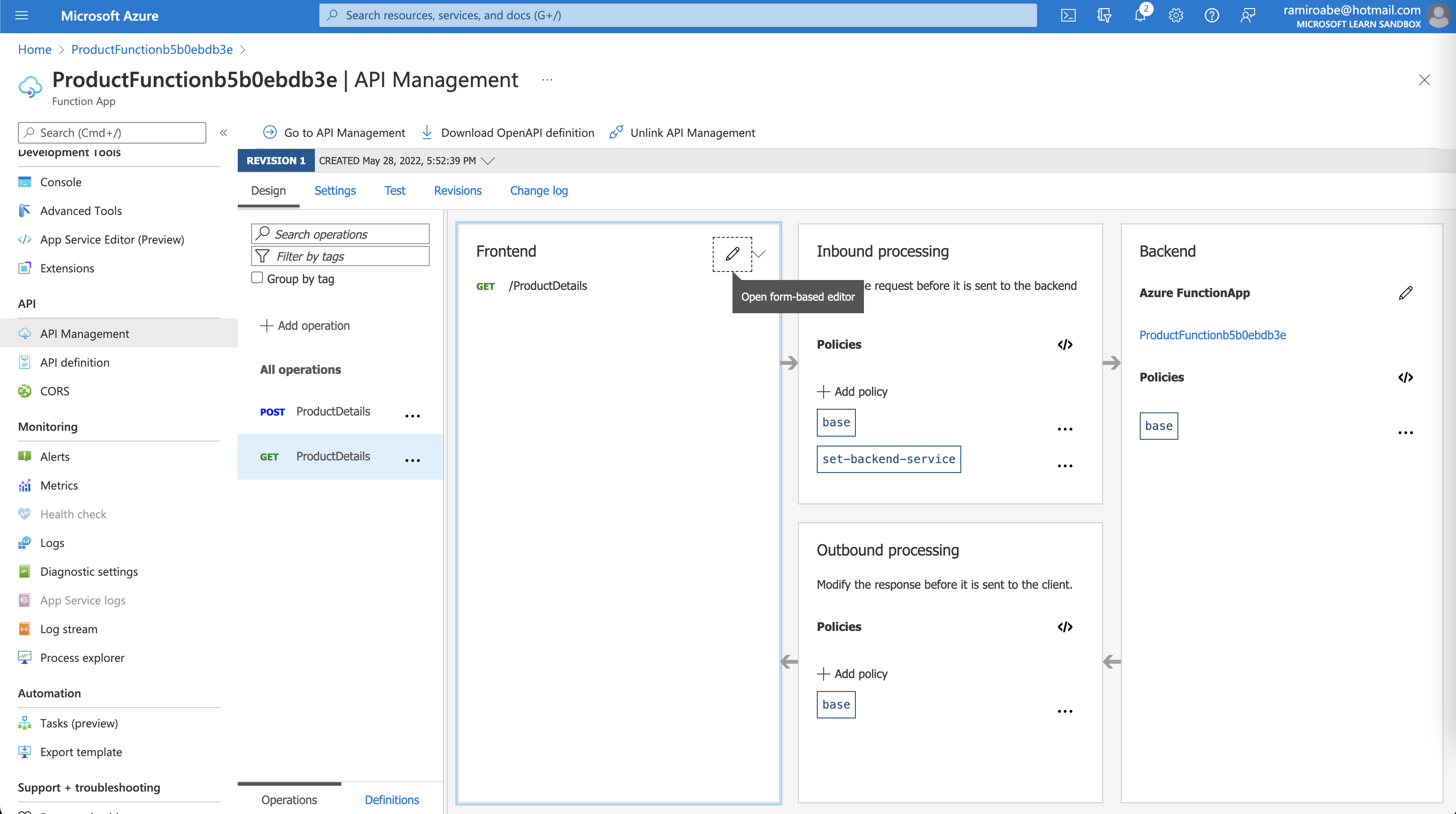

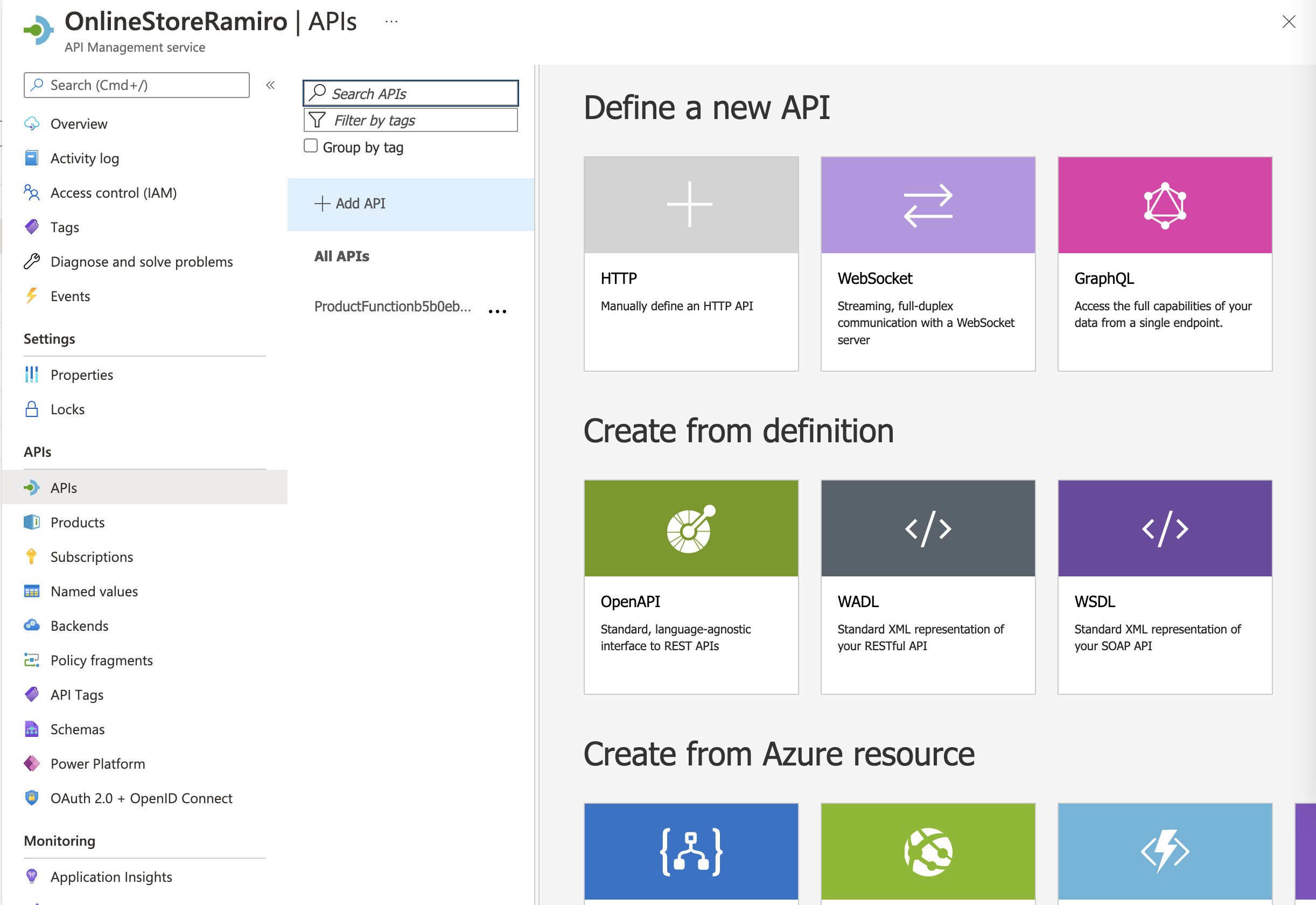

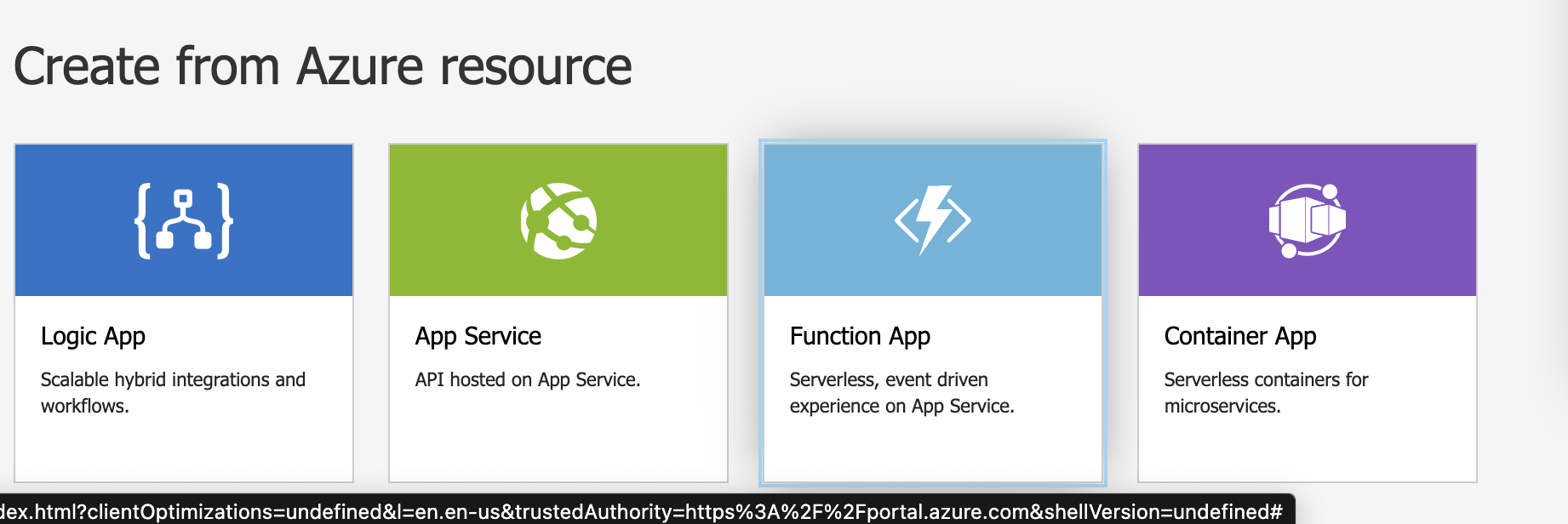

OrderDetails and ProductDetails are the functions created. Inside each function, there is an option called API Management; hit Create new and set the required values.

After the resource is created, the function’s API Management section shows the message “Your App is linked to the API management instance ‘OnlineStore’”. Select Link API, then select the functions highlighted in the list. Azure shows the Create from Function App dialog, where you can set the API URL suffix and create the API.

```shell
curl --location --request GET 'https://onlinestoreramiro.azure-api.net/products/ProductDetails?id=1'
```

- Client apps are coupled to the API expressing business logic, not the underlying technical implementation with individual microservices. You can change the location and definition of the services without necessarily reconfiguring or updating the client apps.

- API Management acts as an intermediary. It forwards requests to the right microservice, wherever it’s located, and returns responses to users. Users never see the different URIs where microservices are hosted.

- You can use API Management policies to enforce consistent rules on all microservices in the product. For example, you can transform all XML responses into JSON, if that is your preferred format.

- Policies also enable you to enforce consistent security requirements.
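To picture how API Management acts as an intermediary, here’s a toy routing table in plain JavaScript. It uses one public base URL, with path prefixes mapped to backend function apps. This is not how API Management is implemented or configured, and all hostnames are hypothetical.

```javascript
// Toy gateway routing table (not API Management itself). One public base URL;
// path prefixes map to backend function apps. All hostnames are hypothetical.
const routes = [
  { prefix: "/products", backend: "https://products-funcs.example.net/api" },
  { prefix: "/orders", backend: "https://orders-funcs.example.net/api" },
];

// Translate a public path such as "/products/ProductDetails?id=1" into the
// backend URL the client never sees. Returns null when no route matches.
function resolveBackend(publicPath, routeTable = routes) {
  const url = new URL(publicPath, "https://gateway.example.net");
  const route = routeTable.find(
    (r) => url.pathname === r.prefix || url.pathname.startsWith(`${r.prefix}/`)
  );
  if (!route) return null;
  const rest = url.pathname.slice(route.prefix.length); // drop the public prefix
  return `${route.backend}${rest}${url.search}`;
}
```

Moving the products functions to another app would only change the routing table, not the URL clients call.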

API Management tools:

- Test each microservice.

- Monitor the behavior and performance of deployed services.

- Importing Azure Function Apps as new APIs or appending them to existing APIs.

- Etc…

You can add several types of functions to API Management.

The Custom Handlers in Azure Functions

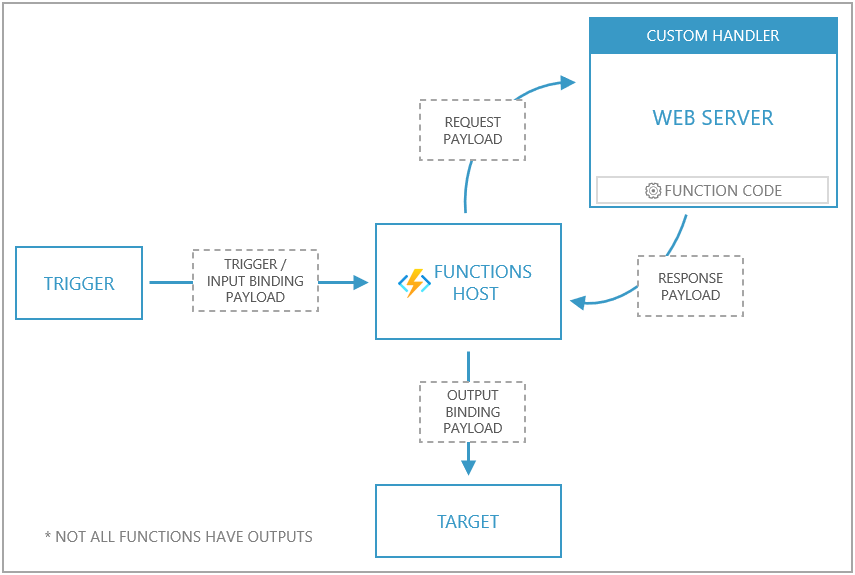

When you build a new Azure Function and use one of the language runtimes not supported by default in your code, you can implement a custom handler.

A custom handler is a web server that receives events from the Functions host. It can be written in any programming language that supports HTTP primitives.

Azure Functions has three central concepts:

- Triggers: It is an event that runs the function. It can be an HTTP request, timer, or other.

- Bindings: It is the helper that connects the function to another service. The bindings can be input and output.

- Functions Host: It controls the application event flow. As the host captures events, it invokes the handler and is responsible for returning a function’s response.

The following actions describe how a request is processed through the Functions host and a custom handler:

- When an event occurs that matches a trigger (for example, an HTTP request), a request is sent to the Functions host.

- The Functions host creates a request payload and sends that to the web server (custom handler). The payload contains information on the trigger, input binding data, and other metadata.

- The function executes your logic, and a response is sent back to the Functions host.

- The Functions host passes outgoing data to a function’s output binding for processing.

So, let’s take a look at the steps. In VS Code, open the Command Palette and select Azure Functions: Create New Project. For the language, select Custom Handler, then select a template (in this case, HttpTrigger) and name the function. The scaffolding creates several files and folders. Next, write the custom app that exposes an HTTP server, build it, and update the host.json file with the following configuration:

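```json
"customHandler": {
  "description": {
    "defaultExecutablePath": "<your-handler-executable>",
    "workingDirectory": "",
    "arguments": []
  }
}
```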

Then run func start to check that everything works.
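The handler can be written in any language with an HTTP server. To keep the examples in one language, here’s a minimal sketch in Node.js using the built-in http module. It makes three assumptions. First, host.json also sets enableForwardingHttpRequest to true, so the host forwards the original HTTP request. Second, defaultExecutablePath points to node, with this file as the argument. Third, the function is named hello.

```javascript
const http = require("http");

// Handles requests forwarded by the Functions host. With HTTP forwarding
// enabled, a call to /api/hello?name=Ada on the function app is assumed to
// arrive here with the same path and query string.
function handleRequest(req, res) {
  const url = new URL(req.url, "http://localhost");
  if (url.pathname === "/api/hello") {
    const name = url.searchParams.get("name") || "world";
    res.writeHead(200, { "Content-Type": "text/plain" });
    res.end(`Hello, ${name}!`);
    return;
  }
  res.writeHead(404, { "Content-Type": "text/plain" });
  res.end("Not found");
}

// The Functions host passes the port to listen on in FUNCTIONS_CUSTOMHANDLER_PORT.
// The server only starts when that variable is set (i.e. when launched by the host).
const port = process.env.FUNCTIONS_CUSTOMHANDLER_PORT;
if (port) {
  http.createServer(handleRequest).listen(Number(port));
}
```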

Resources

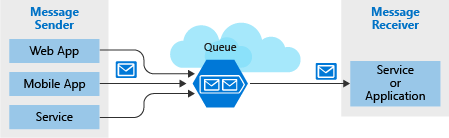

Choose a messaging model in Azure to loosely connect your services

A distributed application can consist of several applications running on different computers or devices. To connect them, you can use a messaging model.

The first thing to understand about a communication is whether it sends messages or events.

- Messages are sent by one application to another.

- Contains raw data not just a reference to that data

- Produced by sender

- Consumed by receiver

- Events are sent by one application to many other applications. The components sending the event are known as publishers, and receivers are known as subscribers.

- Lightweight notification

- Does not contain raw data

- May reference where the data lives

- Sender has no expectations

Azure provides several services for that purpose:

- Azure Queue Storage: A service for storing messages in a secure, managed queue. It can be accessed through a REST API.

- Azure Service Bus: More enterprise-oriented. It’s a message broker that supports multiple communication protocols and security layers, and it can be used in cloud or on-premises environments.

- Azure Event Hubs

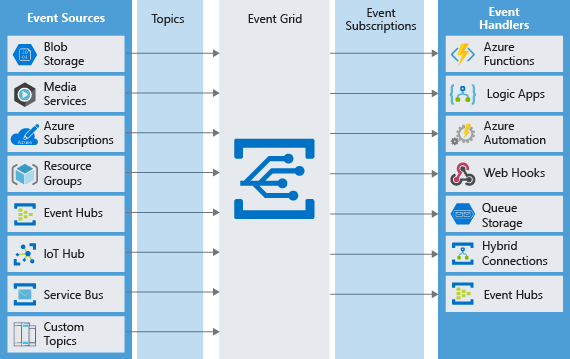

- Azure Event Grid: An easy-to-configure service that connects event sources in Azure to different event handlers.

So, how do you choose between messages and events?

Events are more likely to be used for broadcasts and are often ephemeral, meaning a communication might not be handled by any receiver.

Messages are more likely to be used where the distributed application requires a guarantee that the communication will be processed.

Azure Queue Storage and Azure Service Bus are both based on the idea of a queue, which holds a message until the receiver processes it. However, Queue Storage is less sophisticated than Service Bus. Here are some benefits of Service Bus:

- Service Bus supports messages up to 256 KB in size (100 MB in the premium tier), versus 64 KB for Queue Storage.

- Can group multiple messages into one transaction.

- Role-based security.

- Receive messages without polling the queue.

On the other hand, Queue Storage:

- Supports unlimited queue size (versus 80GB limit for Service Bus queues).

- Maintains a log of all messages.

The Azure Service Bus

With Azure Service Bus, you can exchange messages in two ways: Topics and queues.

With topics, a message sent to a topic can be received by multiple subscribers, whereas each message in a queue is received by only one receiver. Receivers subscribe to a topic through subscriptions, and each topic can have multiple subscriptions.

Internally, topics use queues. When you post to a topic, the message is copied and dropped into the queue for each subscription. The queue means that the message copy will stay around to be processed by each subscription branch even if the component processing that subscription is too busy to keep up.
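The topic-to-subscription copying described above can be modeled in a few lines. This is an in-memory illustration, not the Service Bus SDK. It ignores locking, dead-lettering, and subscription filters.

```javascript
// In-memory illustration (not the Service Bus SDK) of how a topic works:
// publishing copies the message into one queue per subscription, and each
// subscription is drained independently.
class Topic {
  constructor() {
    this.subscriptions = new Map(); // subscription name -> array used as a FIFO queue
  }

  subscribe(name) {
    if (!this.subscriptions.has(name)) this.subscriptions.set(name, []);
  }

  publish(message) {
    // Each subscription gets its own copy (assumes JSON-serializable messages).
    const serialized = JSON.stringify(message);
    for (const queue of this.subscriptions.values()) {
      queue.push(JSON.parse(serialized));
    }
  }

  receive(name) {
    const queue = this.subscriptions.get(name);
    if (!queue) throw new Error(`Unknown subscription: ${name}`);
    return queue.length > 0 ? queue.shift() : null; // null when nothing is waiting
  }
}
```

Because every subscription holds its own copy, a slow subscriber never causes another subscriber to miss the message.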

With Queues, you will have a simple, temporary storage location for messages sent between the components of a distributed application. Each message is received by only one receiver. Destination components will remove messages from the queue as they are able to handle them.

Service Bus queues support many advanced features, like:

- Increased reliability

- Message delivery guarantees

- At-least-once Delivery

- At-Most-Once Delivery

- First-in-First-Out (FIFO)

- Transactional Support

Use Service Bus queues if you:

- Need an At-Most-Once delivery guarantee.

- Need a FIFO guarantee.

- Need to group messages into transactions.

- Want to receive messages without polling the queue.

- Need to provide a role-based access model to the queues.

- Need to handle messages larger than 64 KB but less than 100 MB. The maximum message size supported by the standard tier is 256 KB, and for the premium tier it’s 100 MB.

- Queue size will not grow larger than 1 TB. The maximum queue size for the standard tier is 80 GB, and for the premium tier, it’s 1 TB.

- Want to publish and consume batches of messages.

- Be able to respond to high demand without needing to add resources to the system.

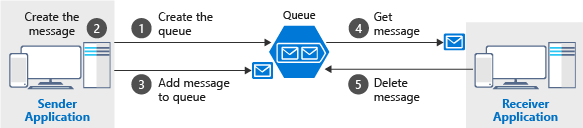

Queue Storage, by contrast, is a simple, easy-to-code queue system. Use it when you need to audit all the messages passed through the queue, expect a large queue (> 1 TB), or want to track progress inside the queue. For more advanced needs, use Service Bus queues. Here is a diagram of Azure Queue Storage:

Workflow:

Note: Notice that get and delete are separate operations. This arrangement handles potential failures in the receiver and implements a concept called at-least-once delivery. After the receiver gets a message, that message remains in the queue but is invisible for 30 seconds. If the receiver crashes or experiences a power failure during processing, then it will never delete the message from the queue. After 30 seconds, the message will reappear in the queue and another instance of the receiver can process it to completion.
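The get/delete behavior can be simulated with a tiny in-memory model. This is not the Azure SDK, and the class and method names are hypothetical. The clock is injectable so the 30-second visibility timeout can be tested without waiting.

```javascript
// In-memory model of Queue Storage's get/delete cycle (not the Azure SDK).
// A received message stays in the queue but is invisible until its visibility
// timeout expires; only an explicit delete with the latest pop receipt removes it.
class VisibilityQueue {
  constructor(visibilityTimeoutMs = 30000, now = () => Date.now()) {
    this.visibilityTimeoutMs = visibilityTimeoutMs;
    this.now = now; // injectable clock so the timeout can be simulated
    this.messages = []; // { id, body, visibleAt, popReceipt }
    this.nextId = 1;
  }

  send(body) {
    this.messages.push({ id: this.nextId++, body, visibleAt: 0, popReceipt: null });
  }

  // "Get": return the first visible message and hide it for the timeout period.
  receive() {
    const t = this.now();
    const message = this.messages.find((m) => m.visibleAt <= t);
    if (!message) return null;
    message.visibleAt = t + this.visibilityTimeoutMs;
    message.popReceipt = `${message.id}-${t}`;
    return { id: message.id, body: message.body, popReceipt: message.popReceipt };
  }

  // "Delete": succeeds only with the receipt from the most recent receive.
  delete(id, popReceipt) {
    const index = this.messages.findIndex((m) => m.id === id && m.popReceipt === popReceipt);
    if (index === -1) return false;
    this.messages.splice(index, 1);
    return true;
  }
}
```

A receiver that crashes before deleting lets the message reappear, so the same message can be processed twice. That is why handlers under at-least-once delivery should be idempotent.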

CLI commands for Azure Queue Storage

```shell
az storage account create
export STORAGE_CONNECTION_STRING=$(az storage account show-connection-string -g learn-b8b3bede-032b-4c9d-90e7-873af3d4d4ff -n imramiroqueues --output tsv)
echo $STORAGE_CONNECTION_STRING
```

NuGet package for Azure Queue Storage

Azure.Storage.Queues

Example

```shell
cd ~
git clone https://github.com/MicrosoftDocs/mslearn-communicate-with-storage-queues.git
```

```csharp
QueueClient queueClient = new QueueClient(connectionString, queueName);
```

NuGet package for Azure Service Bus

Azure.Messaging.ServiceBus

Microsoft provides a library of .NET classes that you can use in any .NET language to interact with a Service Bus queue or topic. The library is distributed as the Azure.Messaging.ServiceBus NuGet package.

When you send a message to a queue, for example, use the SendMessageAsync method with the await keyword.

To send a message to a queue

```csharp
// Create a ServiceBusClient object using the connection string to the namespace.
await using var client = new ServiceBusClient(connectionString);
```

To receive messages from a queue

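```csharp
// Create a ServiceBusProcessor for the queue.
await using ServiceBusProcessor processor = client.CreateProcessor(queueName, new ServiceBusProcessorOptions());
```

```csharp
// After you complete the processing of the message, invoke the following method to remove the message from the queue.
await args.CompleteMessageAsync(args.Message);
```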

Useful commands

Example: git clone https://github.com/MicrosoftDocs/mslearn-connect-services-together.git


Implementation details

https://docs.microsoft.com/en-us/learn/modules/implement-message-workflows-with-service-bus/5-exercise-write-code-that-uses-service-bus-queues

The Azure Event Grid

It’s an event routing service running on top of Azure Service Fabric. It distributes events from different sources, like:

- Azure Blob Storage

- Azure Media Services

- IoT Hubs

- Azure Service Bus

- Etc

To:

- Azure Functions

- Webhooks

- Queue Storage

- Etc

Azure Event Grid is a good fit when you need any of the following:

- Simplicity: it’s easy to connect.
- Advanced filtering: close control over the events received from a topic.
- Fan-out: an unlimited number of subscribers.
- Reliability: retries for each subscription.
- Pay-per-event billing: you pay only for events delivered.

Azure Event Grid is composed of several parts:

- Events: What happened. Events are the data messages passing through Event Grid, and each event can be up to 64 KB in size.

- Event sources: Where the event took place. They are responsible for sending events to Event Grid. The event source is the specific service generating the event for that publisher. For example, Azure Storage is the event source for blob-created events, and IoT Hub is the event source for device-created events.

- Topics: The endpoint where publishers send events.

- Event subscriptions: The endpoint or built-in mechanism to route events, sometimes to multiple handlers. Subscriptions are also used by handlers to filter incoming events intelligently.

- Event handlers: The app or service reacting to the event, sometimes called the subscriber. Almost any application that supports Event Grid can receive events, and no polling is required.
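An event in the Event Grid schema is a JSON array of objects with fields such as topic, subject, eventType, eventTime, id, data, dataVersion, and metadataVersion. The sketch below builds one illustrative `Microsoft.Storage.BlobCreated` event, with a trimmed `data` payload and placeholder IDs, names, and time, and checks its size against the 64 KB figure mentioned above.

```bash
# Illustrative event in the Event Grid event schema. The subscription ID,
# resource group, account, blob name, event ID, and time are placeholders,
# and the data payload is trimmed to two fields.
event_json=$(cat <<'EOF'
[
  {
    "topic": "/subscriptions/<subscription-id>/resourceGroups/<resource-group>/providers/Microsoft.Storage/storageAccounts/<account>",
    "subject": "/blobServices/default/containers/images/blobs/holiday.jpg",
    "eventType": "Microsoft.Storage.BlobCreated",
    "eventTime": "2024-01-01T12:00:00.0000000Z",
    "id": "00000000-0000-0000-0000-000000000000",
    "data": { "contentType": "image/jpeg", "contentLength": 524288 },
    "dataVersion": "",
    "metadataVersion": "1"
  }
]
EOF
)

# Size in bytes ($(( )) strips any padding that wc adds), compared with
# 64 KB = 65,536 bytes.
size=$(( $(printf '%s' "$event_json" | wc -c) ))
limit=65536
if [ "$size" -le "$limit" ]; then
  echo "event is $size bytes: within the 64 KB limit"
else
  echo "event is $size bytes: too large"
fi
```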

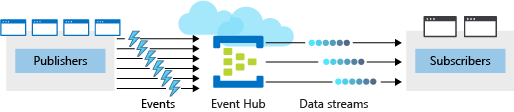

Azure Event Hubs

Event Hubs is an intermediary for the publish-subscribe communication pattern. Unlike Event Grid, however, it is optimized for extremely high throughput, a large number of publishers, security, and resiliency. You can pipeline event streams to other Azure services, such as Azure Stream Analytics, which lets you process complex data streams.

For example, if you had networked sensors in your manufacturing warehouses, you could use Event Hubs coupled with Azure Stream Analytics to watch for patterns in temperature changes that might indicate an unwanted fire or component wear.

In the previous diagram, you can see several players. The publisher is the entity that sends events; it can be any app or device that can send events using HTTPS, AMQP, or Apache Kafka. The subscriber is the entity that receives events. Events need to be small: less than 1 MB.

There are three main components in Event Hubs:

Partitions: A partition is a buffer for events, and each partition has a separate set of subscribers. An event hub needs between 2 and 32 partitions in the standard tier. The partition count should be directly related to the expected number of concurrent consumers, and it can’t be changed after the hub has been created. Partitions divide the message stream so that consumer or receiver apps only need to read a specific subset of the data stream. If you don’t set the partition count, it defaults to 4.

Capture: Event Hubs can send all your events immediately to Azure Data Lake or Azure Blob storage for inexpensive, permanent persistence.

Authentication: All publishers are authenticated and issued a token.
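When publishers send events with a partition key, all events that share a key land in the same partition, which keeps them in order for the consumer reading that partition. Event Hubs uses its own internal hash for this; the sketch below swaps in POSIX `cksum` (a CRC) purely as a stand-in to show the idea. The sensor names and the partition count of 4 are hypothetical, echoing the warehouse example above.

```bash
# Illustration only: map a partition key to a partition index.
# cksum stands in for Event Hubs' internal hash; the point is that the
# same key always maps to the same partition.
partition_for_key() {
  key=$1
  partition_count=$2
  crc=$(printf '%s' "$key" | cksum | awk '{ print $1 }')
  echo $(( crc % partition_count ))
}

# Hypothetical warehouse sensors sending to an event hub with 4 partitions.
for sensor in sensor-a sensor-b sensor-c sensor-a; do
  echo "$sensor -> partition $(partition_for_key "$sensor" 4)"
done
```

Note that `sensor-a` appears twice and gets the same partition both times. This stable mapping is also why the partition count can’t simply change later: a different count would send existing keys to different partitions.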

Create and configure an event hub

There are two main steps to creating a new event hub. The first step is to define the Event Hubs namespace. The second step is to create an event hub in that namespace.

Choose Event Hubs if:

- You need to support authenticating a large number of publishers.

- You need to save a stream of events to Data Lake or Blob storage.

- You need aggregation or analytics on your event stream.

- You need reliable messaging or resiliency.

Commands

Git Repo

git clone https://github.com/Azure/azure-event-hubs.git

```bash
NS_NAME=ehubns-$RANDOM
```

Create a storage account

| Command | Description |
|---|---|
| storage account create | Creates a general-purpose v2 storage account. |
| storage account key list | Retrieves the storage account keys. |
| storage account show-connection-string | Retrieves the connection string for an Azure Storage account. |
| storage container create | Creates a new container in a storage account. |

```bash
STORAGE_NAME=storagename$RANDOM
```
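Storage account names must be 3 to 24 characters long and use only lowercase letters and digits, and they must be globally unique. The helper below (a hypothetical function, not part of the Azure CLI) checks the local rules before you call `az storage account create`. Uniqueness can only be checked against Azure itself.

```bash
# Check the documented naming rules for a storage account name:
# 3 to 24 characters, lowercase letters and digits only.
# Global uniqueness can only be checked against Azure, for example with
# `az storage account check-name --name <name>`.
is_valid_storage_name() {
  name=$1
  len=${#name}
  if [ "$len" -lt 3 ] || [ "$len" -gt 24 ]; then
    return 1
  fi
  # Letters are spelled out instead of [a-z0-9] so that locale collation
  # can't let uppercase letters slip through.
  case $name in
    *[!abcdefghijklmnopqrstuvwxyz0123456789]*) return 1 ;;
  esac
  return 0
}

# "storagename" is 11 characters and $RANDOM (bash) adds at most 5 digits,
# so the generated name stays well under the 24-character limit.
STORAGE_NAME=storagename$RANDOM
if is_valid_storage_name "$STORAGE_NAME"; then
  echo "$STORAGE_NAME looks valid"
fi
```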

Implement message-based communication workflows with Azure Service Bus

- Choose whether to use Service Bus queues or topics to communicate in a distributed application.

- Configure an Azure Service Bus namespace in an Azure subscription.

- Create a Service Bus topic and use it to send and receive messages.

- Create a Service Bus queue and use it to send and receive messages.

Decide between messages and events

Both messages and events are datagrams: data packages sent from one component to another. They differ in ways that initially seem subtle, but those differences can significantly affect how you architect your application.

Messages: A message contains the raw data itself, not a reference to the data such as an ID or a URL. It’s important to avoid sending too much data. The messaging architecture guarantees delivery of the message, and because no additional lookups are required, the message is reliably handled. Sometimes the sender and receiver need to agree on a data contract.

Events: An event triggers a notification that something has occurred. Events are “lighter” than messages and are most often used for broadcast communications.

Events have the following characteristics:

- The event may be sent to multiple receivers, or to none at all.

- Events are often intended to “fan out,” or have a large number of subscribers for each publisher.

- The publisher of the event has no expectation about the action a receiving component takes.

Service Bus is designed to handle messages. If you want to send events, you would likely choose Event Grid.

Conclusions

If your requirements are simple, if you want to send each message to only one destination, or if you want to write code as quickly as possible, a storage queue may be the best option. Otherwise, Service Bus queues provide many more options and flexibility.

If you want to send messages to multiple subscribers, use a Service Bus topic.

Data Storage

There is no silver bullet in terms of storage. You can have several types of data, such as product catalog data, media files like photos and videos, and financial business data. You need to determine the goal of your data, what you want to use it for, and how you can get the best performance for your application.

Data can be classified in one of the following ways:

- structured

- semi-structured

- unstructured

Structured Data

Structured data refers to relational data that is organized in a table-like structure with schemas. You can search, enter and analyze data quickly with query languages such as SQL (Structured Query Language).

Semi-Structured Data

Semi-structured data refers to non-relational or NoSQL data. The data contains tags that make its organization and hierarchy apparent, for example, key-value pairs. How the data is serialized for storage matters; common formats are XML, JSON, and YAML.

Unstructured Data

Unstructured data refers to files such as photos or videos.

Storage accounts let you create a group of data management rules and apply them all at once to the data stored in the account: blobs, files, tables, and queues.

A single Azure subscription can host up to 250 storage accounts per region, each of which has a maximum storage account capacity of 5 PiB.

Data types in Azure storage services

- Blobs: A massively scalable object store for text and binary data. Can include support for Azure Data Lake Storage Gen2.

- Files: Managed file shares for cloud or on-premises deployments. The shares are highly available and are accessed over the Server Message Block (SMB) protocol.

- Queues: A messaging store for reliable messaging between application components.

- Table Storage: A NoSQL store for schema-less storage of structured data. Table Storage is not covered in this module.

Kinds of blobs

| Blob Type | Description |
|---|---|
| Block blobs | Block blobs hold text or binary files up to about 190.7 TiB in current service versions (older service versions allowed about 4.75 TiB, which is where the familiar ~5 TB figure comes from). Their primary use case is storing files that are read from beginning to end, such as media files or image files for websites. |
| Page blobs | Page blobs hold random-access files up to 8 TB. Their primary use is as the backing storage for the VHDs of Azure Virtual Machines. |
| Append blobs | Append blobs are made up of blocks like block blobs, but they are optimized for append operations, such as logging. |
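The block blob size limit is simple arithmetic: the maximum number of committed blocks (50,000) times the maximum block size (4,000 MiB in current service versions). The sketch below does that calculation with integer shell arithmetic. It also works out how many blocks a hypothetical 10 GiB file needs when uploaded in 100 MiB blocks.

```bash
# Maximum block blob size = max committed blocks x max block size.
max_blocks=50000
max_block_mib=4000          # per-block limit in current service versions
mib_per_tib=1048576         # 1 TiB = 1024 * 1024 MiB

max_blob_mib=$((max_blocks * max_block_mib))
# Shell arithmetic is integer-only, so scale by 100 to keep two decimals.
hundredths=$((max_blob_mib * 100 / mib_per_tib))
printf 'max block blob: %d MiB = %d.%02d TiB\n' \
  "$max_blob_mib" $((hundredths / 100)) $((hundredths % 100))

# Blocks needed for a hypothetical 10 GiB file in 100 MiB blocks
# (ceiling division, since the last block can be smaller).
file_mib=$((10 * 1024))
block_mib=100
blocks=$(( (file_mib + block_mib - 1) / block_mib ))
echo "10 GiB in $block_mib MiB blocks: $blocks blocks"
```

This prints `max block blob: 200000000 MiB = 190.73 TiB` and `10 GiB in 100 MiB blocks: 103 blocks`.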

When you create a storage account, you choose its SKU (performance tier and redundancy), for example: Premium_LRS, Standard_GRS, Standard_LRS, Standard_RAGRS, or Standard_ZRS.

Useful commands for Azure storage services

```bash
dotnet new console --name PhotoSharingApp
cd PhotoSharingApp
```

Connect and create a container

```csharp
BlobContainerClient container = new BlobContainerClient(connectionString, containerName);
container.CreateIfNotExists();
```

Upload a file into blob storage

```csharp
string blobName = "docs-and-friends-selfie-stick";
BlobClient blobClient = container.GetBlobClient(blobName);
```

List the blobs in a container

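```csharp
string containerName = "...";
foreach (BlobItem blob in new BlobContainerClient(connectionString, containerName).GetBlobs()) Console.WriteLine(blob.Name);
```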

Security inside Azure Storage

All data in Azure Storage is automatically encrypted by Storage Service Encryption (SSE) with a 256-bit AES cipher. When you read the data, Azure Storage decrypts it before returning it.

For VHDs, Azure uses Azure Disk Encryption. This encryption uses BitLocker for Windows images and dm-crypt for Linux images.

When you enable transport-level security (secure transfer), the storage account accepts requests only over HTTPS, which secures communication over the internet. You can also enable cross-origin resource sharing (CORS) so that web applications on specific domains can issue GET requests against the account.

Every request to a secure resource must be authorized. Azure Storage supports Azure Active Directory and role-based access control (RBAC) for both resource management and data operations. You can also use Shared Key authorization, which supports blobs, files, queues, and tables. With Shared Key, the client signs each request with the account key and sends that signature (not the key itself) in the HTTP Authorization header, and the storage service recomputes the signature to validate the request.

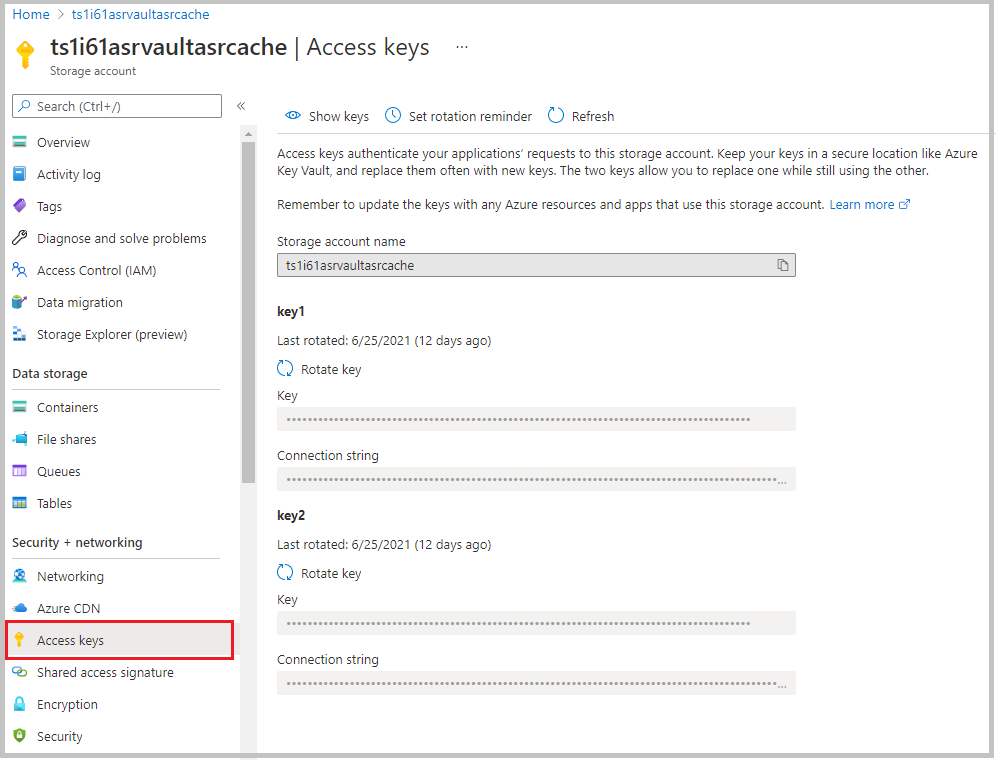

You can get the Access keys under the following page:

The storage account has only two keys, and they provide full access to the account. Because these keys are powerful, use them only with trusted in-house applications that you control completely.

For untrusted clients, use a shared access signature (SAS). A SAS is a string that contains a security token that can be attached to a URI. Use a SAS to delegate access to storage objects and specify constraints, such as the permissions and the time range of access.

Also, you can audit the data that is being accessed by using the built-in Storage Analytics service.

There are several types of shared access signatures:

- Service-level SAS: To allow access to specific resources in a storage account. For example, to allow an app to retrieve a list of files in a file system, or to download a file.

- Account-level SAS: Allows everything a service-level SAS allows, plus additional resources and abilities. For example, you can use an account-level SAS to allow the ability to create file systems.
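A SAS travels as query parameters on the resource URI. In a service SAS for a single blob you typically see sv (storage service version), sr (signed resource, `b` for a blob), sp (permissions, `r` for read), se (expiry time in UTC), spr (allowed protocol), and sig (the signature derived from a key); an account SAS also carries ss and srt for the services and resource types it covers. The sketch below just splits such a URI so you can inspect it. The account, blob, dates, and signature are placeholders.

```bash
# Print the query parameters of a SAS URI, one per line.
# It only splits the string: no URL-decoding, no signature validation.
sas_params() {
  query=${1#*\?}                 # everything after the first '?'
  printf '%s\n' "$query" | tr '&' '\n'
}

# Print the value of one SAS parameter, e.g. sp (permissions) or se (expiry).
sas_param() {
  sas_params "$1" | while IFS= read -r pair; do
    if [ "${pair%%=*}" = "$2" ]; then
      printf '%s\n' "${pair#*=}"
    fi
  done
}

# Hypothetical read-only service SAS for one blob (placeholder values;
# the expiry is URL-encoded, as it usually is in a real token).
url='https://mystorageacct.blob.core.windows.net/images/holiday.jpg?sv=2022-11-02&sr=b&sp=r&se=2024-01-01T13%3A00%3A00Z&spr=https&sig=REDACTED'

sas_params "$url"
echo "permissions: $(sas_param "$url" sp), expires: $(sas_param "$url" se)"
```

Because the whole grant (permissions, expiry, protocol) is signed, a client can read these values but can’t change them without invalidating sig.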

Microsoft Defender for Storage is currently available for Blob Storage, Azure Files, and Azure Data Lake Storage Gen2. You can turn it on in the Azure portal, either from the configuration page of the storage account or from the advanced security section of the portal. To enable it from the storage account, navigate to your storage account, select Security under Security + networking, and then select Enable Microsoft Defender for Storage.

When Microsoft Defender for Storage detects a security anomaly, the alert includes:

- Nature of the anomaly

- Storage account name

- Event time

- Storage type

- Potential causes

- Investigation steps

- Remediation steps

- Email also includes details about possible causes and recommended actions to investigate and mitigate the potential threat

Additional resources

- Azure Storage Services REST API Reference

- Using shared access signatures to provide limited access to a storage account

- Using Azure Key Vault Storage Account Keys with server apps

- Source code for the .NET Azure SDKs

- Azure Storage Client Library for JavaScript

Store application data with Azure Blob storage

Storage accounts are used to separate costs and control access to data. Within an account, you use containers and blobs to organize your data further.

By default, blobs require authentication to access. However, you can configure an individual container to allow public download of its blobs without authentication. This can be useful for storing static files, such as images, videos, and other files that are not sensitive.

Containers are “flat”: you can give blobs names that look like file paths, such as finance/budgets/2017/q1.xls, but the whole string is still just the blob name. These path-like prefixes are called virtual directories, and many tools and client libraries use them to present blobs as a file system, which is very useful for navigating complex blob data.
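Here is a local sketch of how a tool turns flat blob names into virtual directories: it lists one "level" under a prefix, treating everything up to the next `/` as a directory. Blob Storage does the equivalent server-side when you list with a prefix and the `/` delimiter; this function and the sample names are hypothetical and only illustrate the idea.

```bash
# Simulate listing one "level" of a flat container. Given blob names on
# stdin, print the virtual directories (ending in '/') and the blobs
# directly under PREFIX, like a hierarchical listing with '/' as delimiter.
list_level() {
  prefix=$1
  awk -v p="$prefix" '
    p == "" || index($0, p) == 1 {
      rest = substr($0, length(p) + 1)
      slash = index(rest, "/")
      if (slash > 0)
        print p substr(rest, 1, slash)   # a virtual directory
      else
        print $0                         # a blob at this level
    }' | LC_ALL=C sort -u
}

# Hypothetical blob names: the container itself has no folders.
names='finance/budgets/2017/q1.xls
finance/budgets/2017/q2.xls
finance/reports/annual.pdf
finance/readme.txt
hr/policies.pdf'

printf '%s\n' "$names" | list_level ''         # top level
printf '%s\n' "$names" | list_level finance/   # inside the finance/ "directory"
```

The first call prints `finance/` and `hr/`; the second prints `finance/budgets/`, `finance/readme.txt`, and `finance/reports/`.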

There are three types of blobs:

- Block blobs are composed of blocks of different sizes that can be uploaded independently and in parallel. Writing to a block blob involves uploading data to blocks and committing them to the blob.

- Append blobs are specialized block blobs that support only appending new data (not updating or deleting existing data), but they’re very efficient at it. Append blobs are great for scenarios like storing logs or writing streamed data.

- Page blobs are designed for scenarios that involve random-access reads and writes. Page blobs are used to store the virtual hard disk (VHD) files used by Azure Virtual Machines, but they’re great for any scenario that involves random access.
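The block blob model (upload blocks independently, then commit a block list) can be simulated locally. The sketch below splits a file into fixed-size blocks, gives each a Base64 block ID of equal length (Blob Storage requires all block IDs within a blob to be the same length), and "commits" by concatenating the blocks in block-list order. No Azure calls are made; the file, its size, and the 4 KiB block size are hypothetical.

```bash
# Local sketch of the block blob upload model (no Azure calls).
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT

# A hypothetical 10 KiB file of pseudo-random bytes.
head -c 10240 /dev/urandom > "$workdir/photo.bin"

# 4 KiB blocks: 10 KiB -> 3 blocks (4 KiB, 4 KiB, 2 KiB).
split -b 4096 "$workdir/photo.bin" "$workdir/chunk."

# "Put block": record a Base64 ID for every block. The zero-padded counter
# makes every ID encode to the same length.
i=0
: > "$workdir/blocklist"
for chunk in "$workdir"/chunk.*; do
  block_id=$(printf 'block-%06d' "$i" | base64)
  echo "$block_id $(basename "$chunk")" >> "$workdir/blocklist"
  i=$((i + 1))
done
cat "$workdir/blocklist"

# "Put block list": assemble the blocks in list order and verify.
while read -r id chunk; do
  cat "$workdir/$chunk"
done < "$workdir/blocklist" > "$workdir/committed.bin"
cmp -s "$workdir/photo.bin" "$workdir/committed.bin" && echo "committed blob matches original"
```

In the real service, blocks can be uploaded in parallel and in any order; only the committed block list decides the final order of the blob’s content.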

```bash
az storage account create \
  --name <account-name> --resource-group <resource-group>
```

Add the package to a .NET Core application

```bash
dotnet add package Azure.Storage.Blobs
```

git clone https://github.com/MicrosoftDocs/mslearn-store-data-in-azure.git

The stream-based upload code shown here is more efficient than reading the file into a byte array before sending it to Blob Storage. However, the ASP.NET Core IFormFile technique you use to get the file from the client is not a true end-to-end streaming implementation, and is only appropriate for handling uploads of small files.

CLI commands to deploy and run the code in Azure

```bash
az appservice plan create \
  --name <plan-name> --resource-group <resource-group>
```

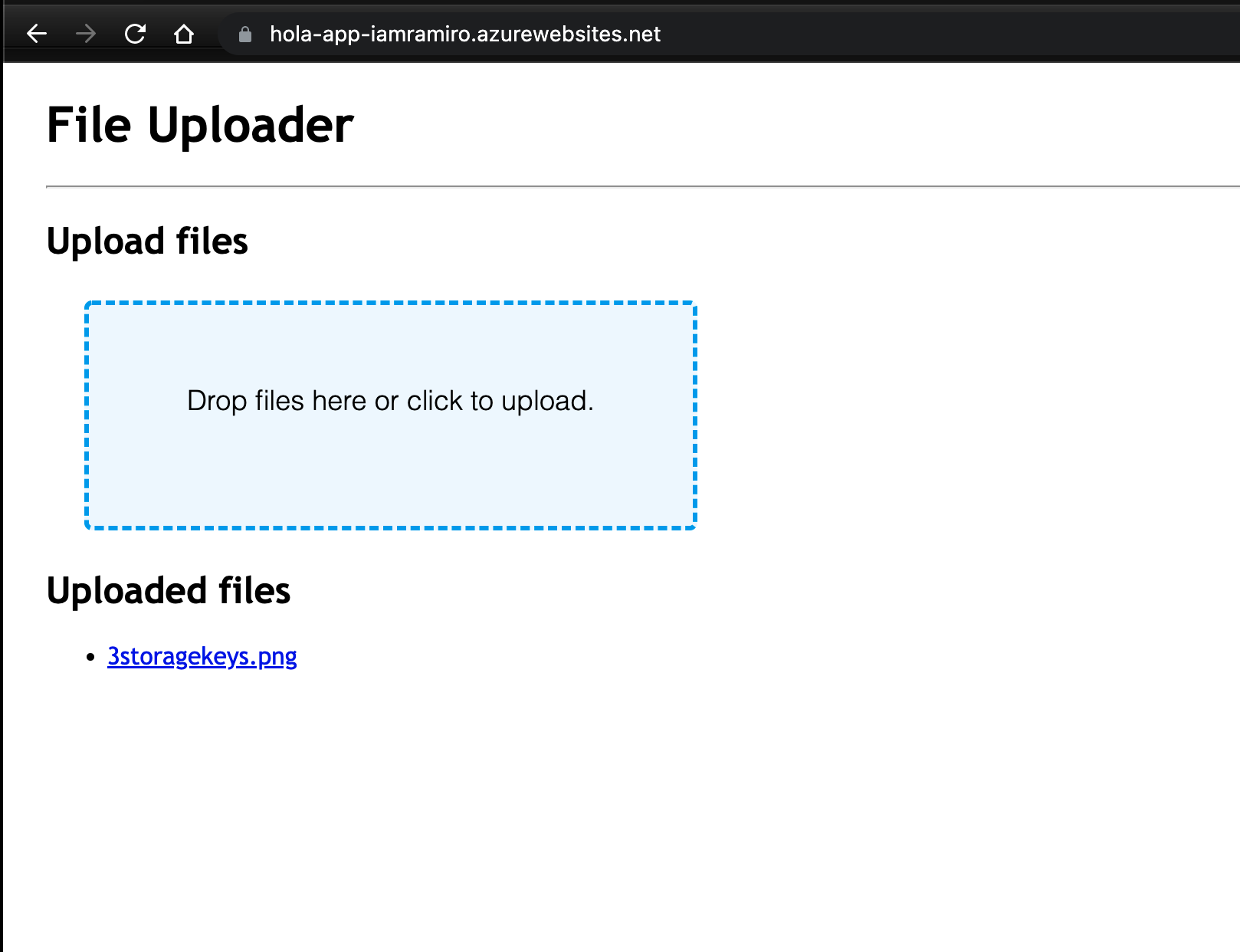

In the following image, the web app shows the blobs in the storage account.

Further Reading

Index data from Azure Blob Storage using Azure Cognitive Search

Naming and Referencing Containers, Blobs, and Metadata

Security recommendations for Blob storage

Configure an ASP.NET Core app for Azure App Service

Azure Virtual Machines [IaaS]

Before the cloud, there were on-premises servers. These servers, located inside the company, ran all of its infrastructure, from databases to web servers. However, keeping servers in a physical location can cause problems, such as limited scalability and difficulty handling heavy load during usage peaks. For that reason, companies started to explore cloud computing as a solution to many of the problems of running servers in a physical location.

With Azure VMs, you have total control over the configuration, and you can install whatever you need. You don’t need to install physical hardware when you need to scale your application, and you can have additional services like monitoring and security.

You still need to maintain the VMs, for example by managing OS updates and patches, performance, and disk space usage, just as you would with a physical server. Each VM includes a collection of components, such as network interfaces, disks, IP addresses, and network security groups, and each of them does the same job as its physical counterpart, but in software.

You can size your VMs based on the amount of CPU, RAM, and disk space you need. Every VM has two disks (an OS disk and a temporary disk), and you can add more disks if required.

When you create a VM, you need to attach a virtual network interface. This network connection is used to communicate with other resources inside your private network, or with the internet through a public IP address.

Before you create a new VM, you need to know which ports to open, which OS to use, how many disks you need and how large they are, and the size of the VM in terms of CPU, memory, and disk performance.

Networking

Before we create the VM, we need to create a virtual network, which works like a private LAN. By default, services outside the virtual network cannot connect to services within it. You can, however, configure the network to allow access to external services, including your on-premises servers.

When you set up a virtual network, you specify the available address spaces, subnets, and security. If the VNet will be connected to other VNets, you must select address ranges that are not overlapping.
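Checking whether two address ranges overlap is just integer arithmetic: convert each CIDR block to a [start, end] range of 32-bit integers and compare. The functions below are a hypothetical helper for planning VNet address spaces before connecting them, not an Azure tool; the example ranges are made up.

```bash
# Check whether two IPv4 CIDR ranges overlap (e.g. before connecting VNets).
ip_to_int() {                 # 10.0.1.0 -> 167772416
  old_ifs=$IFS; IFS=.
  set -- $1                   # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

cidr_range() {                # prints "start end" for a CIDR such as 10.0.0.0/16
  ip=${1%/*}
  bits=${1#*/}
  size=$(( 1 << (32 - bits) ))
  start=$(( $(ip_to_int "$ip") / size * size ))   # align to the network boundary
  echo "$start $(( start + size - 1 ))"
}

cidrs_overlap() {             # succeeds if the two ranges share any address
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

# Hypothetical address spaces for two VNets.
if cidrs_overlap 10.0.0.0/16 10.1.0.0/16; then
  echo "overlap: pick different ranges"
else
  echo "no overlap: safe to connect"
fi
```

Here 10.0.0.0/16 ends at 10.0.255.255 and 10.1.0.0/16 starts right after it, so the script reports no overlap.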

In terms of security, you can configure a network security group (NSG) to control the traffic to the VMs. It works like a firewall, and you can apply custom rules to inbound or outbound requests.

Let’s create the VM

An important step when you create the VM is choosing an appropriate name. The name must be unique and a valid DNS name but, more importantly, it should be meaningful to you. Let’s check the following table.

| Element | Example | Notes |
|---|---|---|
| Environment | dev, prod, QA | Identifies the environment for the resource |
| Location | uw (US West), ue (US East) | Identifies the region into which the resource is deployed |
| Instance | 01, 02 | For resources that have more than one named instance (web servers, etc.) |
| Product or Service | service | Identifies the product, application, or service that the resource supports |
| Role | sql, web, messaging | Identifies the role of the associated resource |

For example, devusc-webvm01 might represent the first development web server hosted in the US South Central location.
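If you script VM creation, it helps to build names from the same parts every time. The `vm_name` function below is a hypothetical helper that reproduces the `devusc-webvm01` pattern (environment + location, then role + `vm` + a two-digit instance); adjust the format to whatever convention your team adopts.

```bash
# Hypothetical helper for the naming convention in the table above:
# <environment><location>-<role>vm<instance>
vm_name() {
  environment=$1   # e.g. dev, prod, qa
  location=$2      # e.g. usc (US South Central), uw (US West)
  role=$3          # e.g. web, sql
  instance=$4      # plain integer (1, 2, 12); printed zero-padded to two digits
  printf '%s%s-%svm%02d\n' "$environment" "$location" "$role" "$instance"
}

name=$(vm_name dev usc web 1)
echo "$name"
# Windows computer names are limited to 15 characters, so check the length.
if [ ${#name} -le 15 ]; then
  echo "$name fits the 15-character Windows computer-name limit"
fi
```

Pass the instance as a plain integer: `printf` treats a leading zero as octal, so `08` would be rejected.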

When you create a VM, several resources are created or used around it:

- The VM itself

- Storage account for the disks

- Virtual network (shared with other VMs and services)

- Network interface to communicate on the network

- Network Security Group(s) to secure the network traffic

- Public Internet address (optional)

Create the VM in the location closest to you or your users to reduce latency. Sometimes the location is also driven by legal compliance or tax requirements.

There are several VM sizes, grouped by purpose. Let’s take a look:

| Option | Description | Sizes |
|---|---|---|
| General purpose | General-purpose VMs are designed to have a balanced CPU-to-memory ratio. Ideal for testing and development, small to medium databases, and low to medium traffic web servers. | B, Dsv3, Dv3, DSv2, Dv2 |
| Compute optimized | Compute optimized VMs are designed to have a high CPU-to-memory ratio. Suitable for medium traffic web servers, network appliances, batch processes, and application servers. | Fsv2, Fs, F |
| Memory optimized | Memory optimized VMs are designed to have a high memory-to-CPU ratio. Great for relational database servers, medium to large caches, and in-memory analytics. | Esv3, Ev3, M, GS, G, DSv2, Dv2 |
| Storage optimized | Storage optimized VMs are designed to have high disk throughput and IO. Ideal for VMs running databases. | Ls |
| GPU | GPU VMs are specialized virtual machines targeted for heavy graphics rendering and video editing. They are also good options for model training and inferencing with deep learning. | NV, NC, NCv2, NCv3, ND |
| High performance compute | High performance compute VMs are the fastest and most powerful CPU virtual machines, with optional high-throughput network interfaces. | H |

You can resize your VM whenever you need to. The price is computed per minute of usage. When the VM is stopped (deallocated), you aren’t billed for compute, but you are still charged for the storage used by its disks.

The VM has at least two virtual hard disks (VHDs). The first disk stores the operating system, and the second is used as temporary storage, so its data can be lost, for example when the VM is deallocated or redeployed. The data for each VHD is held in Azure Storage as page blobs, which allows Azure to allocate space only for the storage you use. This is also how your storage cost is measured: you pay for the storage you consume. You can select Standard or Premium storage for your VM.

On Linux, those disks (VHDs) appear at the following paths:

- The operating system disk: This is your primary drive, and it has a maximum capacity of 2048 GB. It is labeled /dev/sda by default.

- A temporary disk: This provides temporary storage for the OS or any apps. On Linux virtual machines, the disk is /dev/sdb, and it is formatted and mounted to /mnt by the Azure Linux Agent. It is sized based on the VM size and is used to store the swap file.

NOTE: The temporary disk is not persistent. You should only write data to this disk that is not critical to the system.

And finally, choose the OS you want to use. You can choose between Windows, Linux distributions such as Ubuntu, CentOS, and Debian, and Custom. Yes, Custom. You can search the Azure Marketplace for more sophisticated install images, or create your own disk image with what you need, upload it to Azure Storage, and use it to create a VM. Only 64-bit operating systems are supported.

You can also create VMs with the Azure CLI, so you can write automated scripts and run them. Infrastructure as Code (IaC) is a way to define, preview, and deploy cloud infrastructure by using a template language. Some of the tools available for IaC are:

- ARM templates

- Terraform

- Ansible

- Jenkins

- Cloud-init

Creating VMs with the Azure portal is fine for one or two VMs. But what if you want to create a copy of a VM? Doing it by hand is time-consuming, and that’s where Resource Manager templates come into action. You can create a resource template for your VM from the VM menu: under Automation, select Export template.

Let’s create a VM with Azure CLI.

```bash
az vm create \
  --resource-group <resource-group> --name <vm-name> --image <image>
```

You can even create a VM with the Microsoft.Azure.Management.Fluent SDK, available as a NuGet package.

After you create the VM, you can use Azure VM extensions to install additional software on it after the initial deployment, when you want that task to use a specific configuration and to be monitored and executed automatically.

Azure Automation services

If your infrastructure is big enough, you can use Azure Automation services to automate tasks such as process automation, configuration management, and update management. Let’s take a look at the following table:

| Service | Description |
|---|---|
| Process Automation | Let’s assume you have a VM that is monitored for a specific error event. You want to take action, and fix the problem as soon as it’s reported. Process automation enables you to set up watcher tasks that can respond to events that may occur in your datacenter. |
| Configuration Management | Perhaps you want to track software updates that become available for the operating system that runs on your VM. There are specific updates you may want to include or exclude. Configuration management enables you to track these updates, and take action as required. You use Microsoft Endpoint Configuration Manager to manage your company’s PC, servers, and mobile devices. You can extend this support to your Azure VMs with Configuration Manager. |
| Update Management | This is used to manage updates and patches for your VMs. With this service, you’re able to assess the status of available updates, schedule installation, and review deployment results to verify updates applied successfully. Update management incorporates services that provide process and configuration management. You enable update management for a VM directly from your Azure Automation account. You can also enable update management for a single virtual machine from the virtual machine pane in the portal. |

Availability sets

Microsoft offers a 99.95% external connectivity service level agreement (SLA) for multiple-instance VMs deployed in an availability set. That means that for the SLA to apply, there must be at least two instances of the VM deployed within an availability set.

Manage the availability of your Azure VMs

Back up the VM

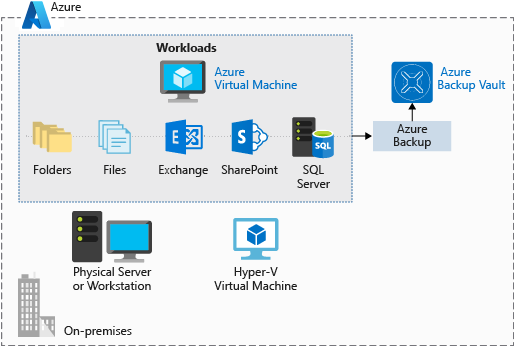

Azure Backup is a backup as a service offering that protects physical or virtual machines no matter where they reside: on-premises or in the cloud.

Azure Backup can be used for a wide range of data backup scenarios, such as:

- Files and folders on Windows OS machines (physical or virtual, local or cloud)

- Application-aware snapshots (Volume Shadow Copy Service)

- Popular Microsoft server workloads such as Microsoft SQL Server, Microsoft SharePoint, and Microsoft Exchange

- Native support for Azure Virtual Machines, both Windows and Linux

- Linux and Windows 10 client machines

Create a Linux VM

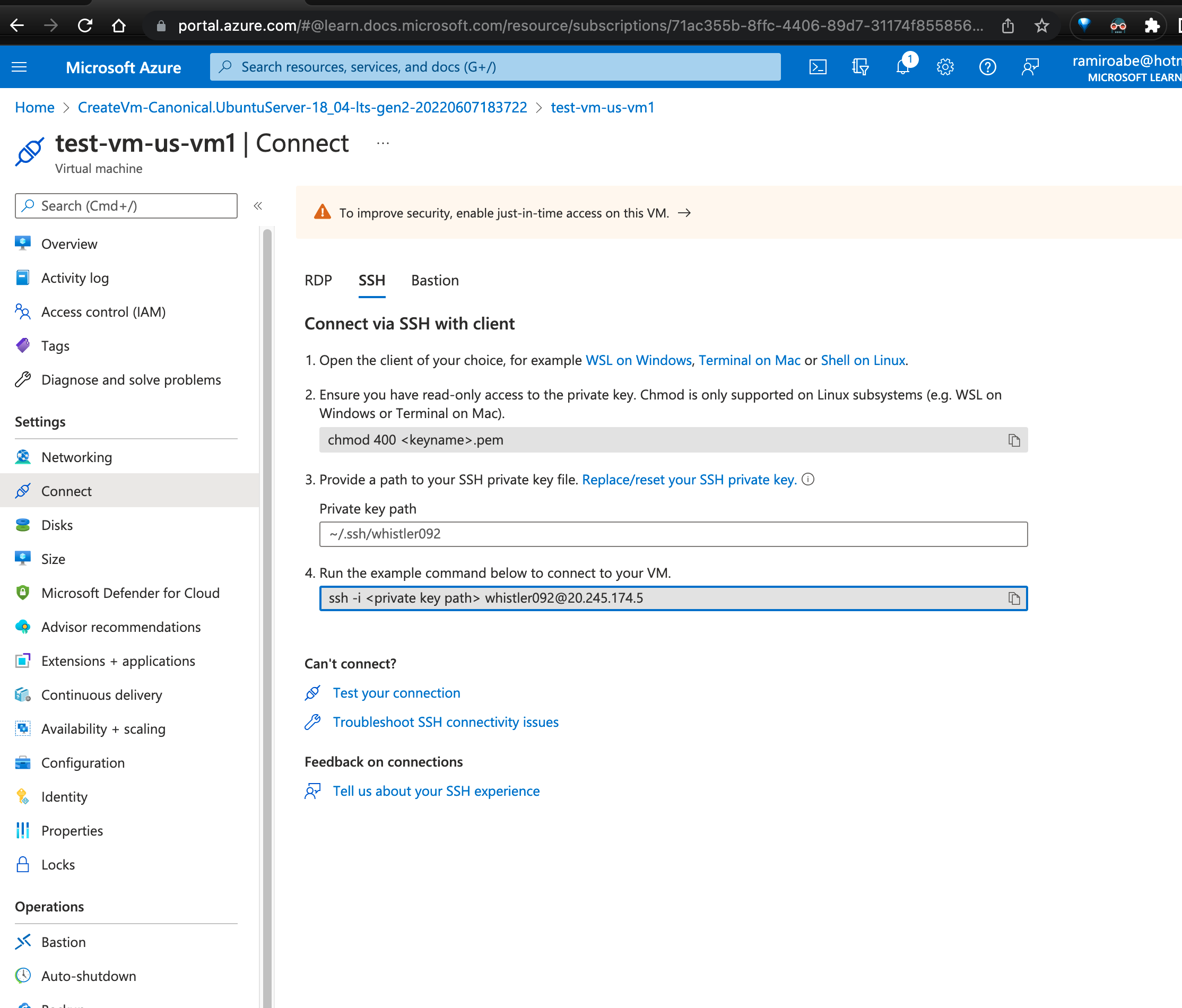

For Linux VMs, if you want remote access to the VM, you need to connect through SSH. It’s an encrypted connection protocol that allows secure sign-ins over unsecured connections. SSH allows you to connect to a terminal shell from a remote location using a network connection.

There are two ways to authenticate in an SSH connection: username and password or SSH key pair. Although SSH provides an encrypted connection, using passwords with SSH connections leaves the VM vulnerable to brute-force attacks of passwords. A more secure and preferred method of connecting to a Linux VM with SSH is a public-private key pair, also known as SSH keys.

So, let’s create an SSH key pair:

```bash
ssh-keygen \
  -m PEM -t rsa -b 4096
```

Use the SSH key when creating a Linux VM. To apply the SSH key while creating a new Linux VM, you will need to copy the contents of the public key and supply it to the Azure Portal, or supply the public key file to the Azure CLI or Azure PowerShell command.

If you have already created the Linux VM, you can install the public key onto your Linux VM with the ssh-copy-id command.

```bash
ssh-copy-id -i ~/.ssh/id_rsa.pub azureuser@myserver
```

Connect to the VM with SSH

To connect to the VM via SSH, you need the following items:

- Public IP address of the VM

- Username of the local account on the VM

- Public key configured in that account

- Access to the corresponding private key

- Port 22 open on the VM

Perform the following steps to connect to the VM:

```bash
chmod 400 ~/.ssh/id_ssh2.pem
ssh -i ~/.ssh/id_ssh2.pem azureuser@<public-ip-address>
```

Initialize data disks

Any additional drives you create from scratch need to be initialized and formatted. The process is the same as for a physical disk.

```bash
dmesg | grep SCSI
```

Now that the new disk is attached to the VM, let’s install a web server:

```bash
sudo apt-get update
```

The service is running, but by default all ports are closed. Let’s open port 80 by adding an inbound rule that allows traffic on port 80 to a network security group.

But, what is a network security group? Virtual networks (VNets) are the foundation of the Azure networking model, and provide isolation and protection. Network security groups (NSGs) are the primary tool you use to enforce and control network traffic rules at the networking level. NSGs are an optional security layer that provides a software firewall by filtering inbound and outbound traffic on the VNet.

Security groups can be associated with a network interface (for per-host rules), a subnet in the virtual network (to apply to multiple resources), or both.

When we created the VM, we selected the inbound port SSH so we could connect to the VM. This created an NSG that’s attached to the network interface of the VM. That NSG is blocking HTTP traffic. Let’s update this NSG to allow inbound HTTP traffic on port 80.

Go to the VM, select Settings > Networking, and you should see the associated NSG. Select Add inbound port rule, choose the HTTP service, and port 80 should be selected automatically.
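NSG rules are evaluated in priority order, lowest number first, and processing stops at the first rule that matches. The sketch below is a simplified local model of that behavior for inbound traffic. It matches only the destination port (real rules also match source, destination, and protocol) and leaves out the other default rules. The SSH and HTTP rule priorities are hypothetical; DenyAllInBound at 65500 is one of the NSG default rules.

```bash
# Simplified model of NSG inbound evaluation: sort rules by priority
# (lowest number first) and apply the first rule whose port matches.
# Format: <priority> <destination port or *> <action> <name>
rules='300 22 Allow SSH
65500 * Deny DenyAllInBound'

evaluate() {
  port=$1
  printf '%s\n' "$rules" | sort -n | while read -r priority rule_port action name; do
    if [ "$rule_port" = "*" ] || [ "$rule_port" = "$port" ]; then
      echo "$action ($name, priority $priority)"
      break                       # first match wins
    fi
  done
}

evaluate 22     # Allow (SSH, priority 300)
evaluate 80     # Deny (DenyAllInBound, priority 65500)

# Adding an HTTP rule, like the portal step above.
rules="$rules
310 80 Allow HTTP"
evaluate 80     # Allow (HTTP, priority 310)
```

This is why the new rule works: its priority (310) is lower than the default deny rule (65500), so it is evaluated first.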

Let’s create a new application

```bash
az group create \
  --name <resource-group> --location <location>
```

Align requirements with cloud types and service models in Azure

Azure solutions for public, private, and hybrid cloud

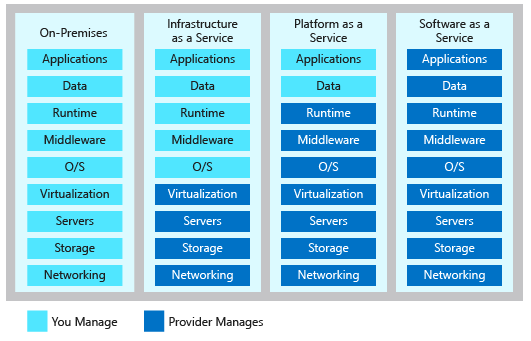

Cloud computing resources are delivered using three different service models.

- Infrastructure as a service (IaaS) provides instant computing infrastructure that you can provision and manage over the Internet.

- Platform as a service (PaaS) provides ready-made development and deployment environments that you can use to deliver your own cloud services.

- Software as a service (SaaS) delivers applications over the Internet as a web-based service.

Control Azure services with the CLI

The UI works fine, but for tasks that need to be repeated daily or even hourly, using the command line and a set of tested commands or scripts can help get your work done more quickly and avoid errors.

Let’s check some useful commands.

```bash
brew update
brew install azure-cli
```

Find commands

```bash
az find blob
```

Login

```bash
az login
```

Create a Resource Group

```bash
az group create --name <name> \
  --location <location>
```

Exercise - Create an Azure website using the CLI

```bash
export RESOURCE_GROUP=learn-c35d9ea4-f957-42d7-835b-4ba0dd378a50
```

Automate Azure tasks using scripts with PowerShell

Creating administration scripts is a powerful way to optimize your workflow. You can automate common, repetitive tasks. Once a script has been verified, it will run consistently, likely reducing errors.

TODO:

Tutorial: Deploy an ASP.NET Core and Azure SQL Database app to Azure App Service